A recent appearance by Mark Zuckerberg on the Joe Rogan podcast provides a great explanation of how classifiers, confidence levels, and precision work with machine learning algorithms. He also explained how adjusting confidence levels can affect the amount of collateral damage caused when updates roll out. And if you replace “social” with “search,” Zuckerberg could be talking about a major update to Google’s algorithm.

I recently listened to the Joe Rogan podcast with Mark Zuckerberg, CEO of Meta, to hear Mark’s thoughts on AI, augmented reality, the future of social media, etc. Although Mark discussed topics related to social media extensively, one part particularly stood out to me from an SEO perspective. Mark talked about building systems to find harmful content, building classifiers to identify that content, and then setting levels of precision (and how those levels affect collateral damage).

He explained how this works clearly and succinctly, and I immediately thought it could help website owners better understand how machine learning classifiers work for search. It could also help site owners and SEOs understand why, from Google’s perspective, some sites hit by major algorithm updates probably shouldn’t have been affected.

Over time, there have been some famously punitive Google algorithm updates that used classifiers to identify sites that were problematic from a quality perspective. And yes, there was collateral damage along the way. Understanding how classifiers work in machine learning shows how hard it is for Google to catch bad actors algorithmically, while also revealing the frightening reality of collateral damage. That collateral damage can be devastating for affected website owners. I’ll cover more about this later in this post.

First, I’m providing Mark’s video clip below. Then I’ll explain how this relates to SEO, Google’s algorithm updates, and some of the most famous punitive algorithm updates Google has released over time. I’ll also explain how this relates to Google’s broad core updates, which typically roll out four to five times per year and can have a big impact on search visibility across all sites.

The video clip: Classifiers, confidence levels and collateral damage.

Below, at 30:45 in the video, Mark discusses the challenges of building systems and classifiers to target dangerous content. For website owners and SEOs, you can swap “dangerous content” for any type of quality issue that Google wants to address with machine learning, for example, “unhelpful content” or “spam content.”

How this affects SEO and Google algorithm updates.

As you can see from Mark’s comments, there is always a battle between confidence levels and collateral damage. For example, if you set a 99% confidence level on a classifier, you may only remove a small percentage of low-quality content (or whatever problem you’re targeting). However, if you lower the confidence level to, say, 90%, you may be able to catch 60% of low-quality content, but there will be a lot more collateral damage.
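To make that tradeoff concrete, here is a minimal Python sketch that treats a classifier’s “confidence level” as a score threshold: any site scoring at or above it gets flagged. The scores, labels, and function names are entirely hypothetical, and this is a generic illustration of threshold tuning, not how Google’s (or Meta’s) systems actually work.

```python
from dataclasses import dataclass


@dataclass
class ThresholdResult:
    threshold: float
    flagged: int      # sites the classifier acts on
    caught: int       # flagged sites that really are low quality
    collateral: int   # flagged sites that are actually fine (false positives)
    recall: float     # share of all low-quality sites that were caught
    precision: float  # share of flagged sites that really are low quality


def evaluate_threshold(sites, threshold):
    """Flag every site whose score is at or above `threshold` (hypothetical model)."""
    flagged = [is_bad for score, is_bad in sites if score >= threshold]
    caught = sum(flagged)
    collateral = len(flagged) - caught
    total_bad = sum(is_bad for _, is_bad in sites)
    recall = caught / total_bad if total_bad else 0.0
    # If nothing is flagged, nothing good was hit, so treat precision as 1.0.
    precision = caught / len(flagged) if flagged else 1.0
    return ThresholdResult(threshold, len(flagged), caught, collateral, recall, precision)


# Hypothetical (score, actually_low_quality) pairs, invented for illustration.
SITES = [
    (0.99, True), (0.97, True), (0.95, False), (0.93, True), (0.91, True),
    (0.88, False), (0.85, True), (0.70, False), (0.60, True), (0.40, False),
]

if __name__ == "__main__":
    for t in (0.99, 0.90, 0.80):
        r = evaluate_threshold(SITES, t)
        print(f"threshold={t:.2f} caught={r.caught} collateral={r.collateral} "
              f"recall={r.recall:.0%} precision={r.precision:.0%}")
```

With these made-up numbers, moving the threshold from 0.99 to 0.90 raises recall from about 17% to about 67%, but one good site now gets flagged. At 0.80, two good sites are caught in the net.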

Even at 90% confidence, 10% of flagged sites (which could then be affected by an algorithm update) could represent collateral damage. Multiply that across all sites on the web, or across a category that could be targeted, and many sites that don’t actually have a problem could be affected by an algorithm update.
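The arithmetic at scale is simple but sobering. This sketch assumes, as the paragraph above does, that “90% confidence” means 90% of flagged sites truly have the problem (that is, the classifier’s precision). The flagged-site counts are hypothetical, and `expected_collateral` is an illustrative helper, not part of any real system.

```python
def expected_collateral(flagged_sites: int, precision: float) -> int:
    """Estimate how many flagged sites are false positives (collateral damage).

    Hypothetical helper: assumes precision applies uniformly to the flagged set.
    """
    if not 0.0 <= precision <= 1.0:
        raise ValueError("precision must be between 0 and 1")
    return round(flagged_sites * (1.0 - precision))


if __name__ == "__main__":
    # Hypothetical: 100,000 sites flagged at two different precision levels.
    for p in (0.90, 0.99):
        print(f"precision={p:.0%}: ~{expected_collateral(100_000, p):,} sites wrongly hit")
```

Even a highly precise classifier can hit a large absolute number of innocent sites once it runs across the whole web.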

If you think about big algorithm updates of the past like Panda, Penguin, Product Reviews Updates (PRU), and the Helpful Content Update (HCU), they all used classifiers. Google’s Pirate algorithm also uses a classifier and, as far as we know, it is still running regularly. While we don’t know the confidence levels Google has used over time with these systems and updates, there have clearly been instances of collateral damage.

The most recent example of this is the September 2023 helpful content update, or what I call HCU(X). Google even told some creators who were severely affected by this update that there was nothing wrong with their sites. Note that Google is not saying that ALL of the sites affected by the September 2023 helpful content update were fine, only that some were collateral damage.

Then, with the March 2024 broad core update, Google integrated the HCU into its core ranking systems, removed the classifier, and stated that multiple core systems now evaluate the helpfulness of content. Unfortunately, many of the sites impacted by HCU(X) in September 2023 still have not recovered significantly (or have only recovered slowly over time). Some rose sharply during broad core updates, only to decline again with subsequent algorithm updates.

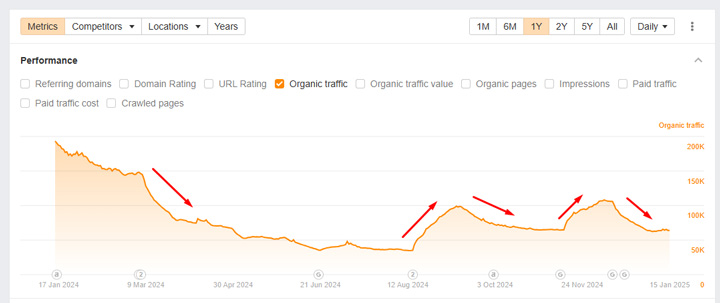

For example, here’s a site that was heavily impacted by HCU(X) in September 2023, then rose sharply during subsequent core updates, only to fall back a bit.

The first screenshot shows a decrease with the March 2024 core update, an increase with the August core update, and then a decrease again. Then it rises sharply again with the November core update, only to fall again with the December core update. But that doesn’t even give you the full picture. Take a look at the next screenshot…

The next screenshot shows the true impact of the September 2023 helpful content update. There was a massive decline with HCU(X) in September, a further drop with the March 2024 core update, and then the rises and falls described above. Needless to say, it’s not pretty…

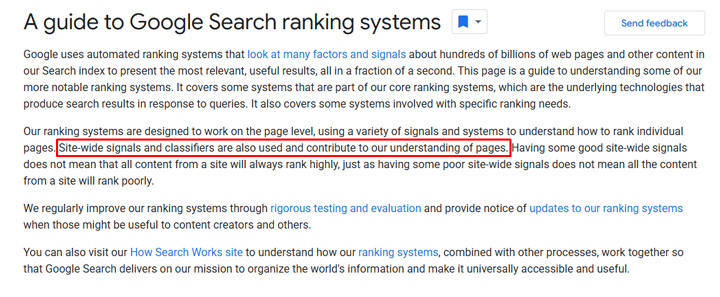

Google also explains in its Guide to Search Ranking Systems that “site-wide signals and classifiers … are used and contribute to our understanding of pages.” So there could be any number of classifiers in Google’s core ranking systems, each targeting a particular problem or condition. Adjusting the confidence levels of those classifiers could therefore cause collateral damage in broad core updates, as well as in specific algorithm updates such as reviews updates, Pirate, the HCU (in the past), Penguin (in the past), etc. With core updates, many systems work together, so adjusting the confidence level of a single classifier may matter less than it does with the targeted algorithm updates mentioned above. However, you never know how powerful one of these classifiers is…

Yo-yo trends and confidence levels.

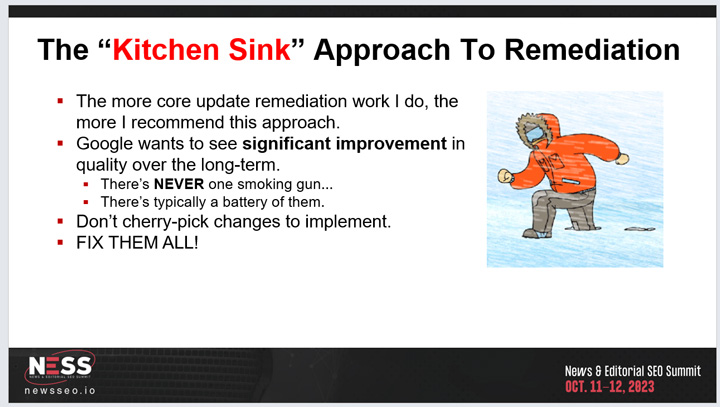

There are many examples of websites rising and falling sharply over time during major core updates, and some of these could well be due to Google tinkering with classifiers and confidence levels. When it comes to Google’s major core updates, I’ve always stated that site owners should move well out of the gray area when it comes to “quality,” and this is one of the reasons why.

If you’re at the edge in terms of quality (in the gray area) and Google is tinkering with the confidence levels of multiple classifiers used in its core ranking system, you could see a sharp increase with one update and a sharp decline with the next. And as long as a site remains in the gray area in terms of quality, yo-yo trends may continue to occur over time as Google adjusts the confidence levels of multiple classifiers.
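Here is a minimal Python sketch of why gray-area sites yo-yo. It assumes a single hypothetical classifier with a fixed score per site, while the threshold moves slightly from one update to the next. All thresholds, scores, and function names are invented for illustration; the post describes multiple classifiers, which would only add more ways for a borderline site to flip.

```python
# Hypothetical confidence thresholds applied in four successive updates.
# These values are invented for illustration only.
UPDATE_THRESHOLDS = [0.90, 0.85, 0.89, 0.86]


def flag_history(site_score, thresholds):
    """Return whether the site would be flagged in each successive update."""
    return [site_score >= t for t in thresholds]


def count_flips(history):
    """Count how often the flagged/not-flagged status changes between updates."""
    return sum(1 for prev, cur in zip(history, history[1:]) if prev != cur)


if __name__ == "__main__":
    # Hypothetical quality-problem scores: higher means "more likely low quality".
    for label, score in [("gray-area site", 0.87), ("clearly fine site", 0.40)]:
        history = flag_history(score, UPDATE_THRESHOLDS)
        print(label, history, "flips:", count_flips(history))
```

The borderline site (0.87) flips between flagged and not flagged on every update, while the site well out of the gray area (0.40) never moves. Nothing about either site changed; only the threshold did.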

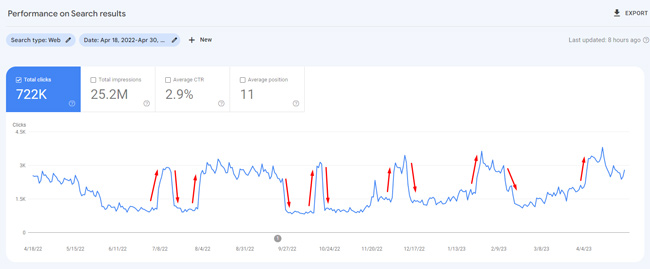

Here’s a good example of a site that spikes and crashes like crazy with various updates, including product reviews updates and broad core updates (and even unconfirmed updates). Talk about yo-yo trends…

There are, of course, other reasons this type of trend could occur, but it makes perfect sense that changing the confidence levels of classifiers could cause a lot of volatility for sites that sit right at the threshold where a classifier would flag them. That can definitely lead to yo-yo trends over time, and it’s another reason to get out of the gray area of Google’s algorithms in terms of quality. For this reason, I have always recommended significantly improving quality over time, using a “kitchen sink” approach to remediation. Don’t cherry-pick changes. Instead, fix as many issues as possible that could affect how Google evaluates quality. Based on my experience helping many companies that were negatively impacted by major algorithm updates, this is the best path forward.

The future of broad core updates is… no broad core updates.

On the subject of broad core updates, yo-yo trends, and collateral damage, I’ve been saying for some time that the future of broad core updates is… no broad core updates. I believe we will see a time when Google’s core ranking systems are updated and improved regularly and continuously, without a specific date for each update to roll out. When that happens, sites are unlikely to see a massive drop on any given day (or during a relatively short rollout). Instead, a site might gradually decline over time as various systems are updated frequently.

On the one hand, site owners should technically be able to reverse a decline faster than if they had to wait for the next broad core update to roll out. On the other hand, it may not be immediately obvious what is happening if their visibility erodes gradually. Continuous updates would also stop site owners from loudly voicing their frustration every time a broad core update rolls out, and Google would love nothing more than to prevent that. Those outcries look bad for Google: they always end up in the digital marketing news, and sometimes in the mainstream news as well.

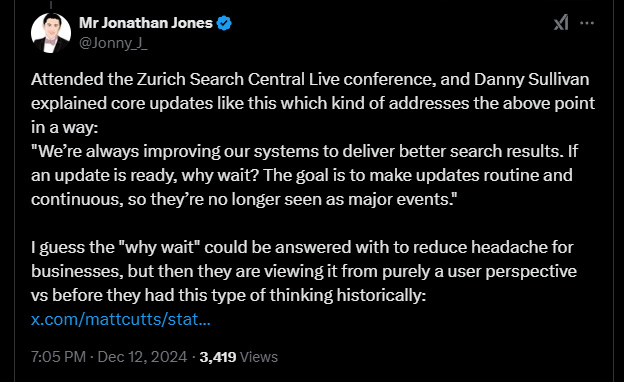

Oh, and Google recently stated at the Search Central Live event in Zurich that it would like to release more core updates, and more frequently. As Danny Sullivan explained: “…the goal is to make updates routine and continuous so that they are no longer viewed as major events.”

So, basically what I just discussed above. 🙂

Here is a tweet from Jonathan Jones, who attended Search Central Live in Zurich:

Summary: The confidence level determines the amount of collateral damage.

I found Mark Zuckerberg’s comments about building systems to identify harmful content extremely interesting, especially since the process maps so closely to how Google’s search systems work. As Google tries to address quality issues in search, it can build systems and classifiers to identify those issues. Then Google needs to choose the right confidence level for each classifier, balancing how much of the problem it catches against how much collateral damage it causes. It’s a difficult balance, and it clearly creates major problems for website owners who are unfairly caught in the crosshairs.

I hope you found the video and this post helpful. Again, Mark Zuckerberg provided a succinct explanation of how classifiers work. Just swap “social” for “search” and he could be talking about any major Google algorithm update.

GG