I had the privilege of being invited to Google I/O 2025. And boy, what an event!

Google said that AI mode is the beginning of a new era, one in which Search is transformed with Gemini 2.5 at its core. Search is changing dramatically.

I met Google CEO Sundar Pichai and sat in on a fireside chat with DeepMind CEO Demis Hassabis and Sergey Brin. There will be more about that talk in my next article and on my YouTube channel.

In this article we will talk about AI mode and other incredibly interesting changes coming to Search, including live search, personal context, Gemini in Chrome, agentic search and some wild e-commerce changes.

We have a lot to learn!

AI mode

Google called AI mode a total reimagining of Search. It is “Search transformed with Gemini 2.5 at its core.”

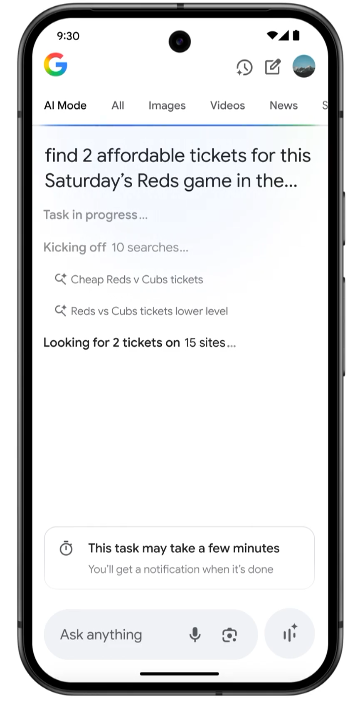

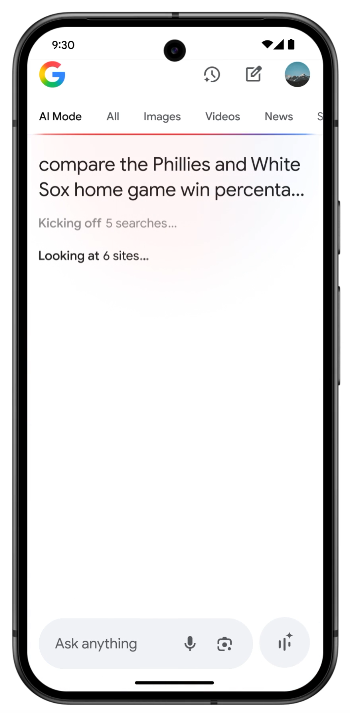

Important to know: AI mode uses a “query fan-out” technique. This means it breaks a query into subtopics, runs a search for each of them and then generates an AI answer. At the moment it is only available in the US, and users have to click on a tab or button to access it. Users can ask follow-up questions. AI mode uses Gemini 2.5, Google’s most capable model.
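To make the fan-out idea more concrete, here is a toy sketch. It is not Google’s implementation: the fixed facets, the `search` stub and the tiny in-memory index are all hypothetical stand-ins. It only shows the general shape of the technique: split one query into subtopic queries, run each one, and merge the hits into a de-duplicated pool of sources that an answer could then be generated from.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    url: str
    snippet: str


# Hypothetical in-memory "index" standing in for a real search backend.
INDEX = {
    "best summer reads 2025": [Result("https://a.example/summer", "Top summer books")],
    "best summer reads nonfiction": [
        Result("https://b.example/nonfic", "Nonfiction picks"),
        Result("https://a.example/summer", "Top summer books"),
    ],
    "best summer reads beach": [Result("https://c.example/beach", "Light beach reads")],
}


def fan_out(query: str) -> list[str]:
    """Split a query into subtopic queries (hypothetical fixed facets)."""
    facets = ["2025", "nonfiction", "beach"]
    return [f"{query} {facet}" for facet in facets]


def search(sub_query: str) -> list[Result]:
    """Stub search: look the sub-query up in the toy index."""
    return INDEX.get(sub_query, [])


def gather_sources(query: str) -> list[Result]:
    """Run every sub-query and merge results, keeping the first hit per URL."""
    seen: set[str] = set()
    merged: list[Result] = []
    for sub_query in fan_out(query):
        for result in search(sub_query):
            if result.url not in seen:
                seen.add(result.url)
                merged.append(result)
    return merged


if __name__ == "__main__":
    for source in gather_sources("best summer reads"):
        print(source.url)
```

Four hits come back across the three sub-queries, but only three distinct sources survive the merge. That is one simple way a system could read many pages while surfacing only a handful of links.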

AI mode is different from conventional search.

Take a look at this search in AI mode. You can see that it says “Start 1 Search” and that it searched 59 websites to generate this answer. It is extremely comprehensive. Not all 59 websites are linked, though; only 4 appear in the carousel.

AI mode is currently not broken out in GSC, but it sounds like we will get some kind of reporting on it. At the moment, visits from AI mode are sent as “no-referrer.” John Mueller says this is a bug. We will get an indication in GSC of how we appear in AI mode.

Marie’s thoughts: There is a good chance that AI mode will replace traditional search. I think that was Google’s goal from the start. We need to study carefully how it decides which websites to show. We also have to consider that, over time, people will see personalized results in AI mode (more on this shortly), so tracking your presence there will be difficult.

I found it encouraging that Google said AI mode aims to show you “links to content and creators that you may not have discovered so far.” I believe this means that truly original and insightful content can appear here, even without obvious brand recognition.

Additional learning:

Expanding AI Overviews and introducing AI Mode. Google Blog.

AI mode evaluates websites differently than conventional search. It uses a “query fan-out” technique. Marie Haynes.

Study what the Quality Rater Guidelines say about reputation and topical expertise. This is probably a key component of ranking in AI mode.

Google’s guidance on creating helpful content.

Gemini Live

With Gemini Live you can point your phone, AI glasses or XR (extended reality) headset at anything, ask questions and search with AI mode.

Important to know: Gemini Live has the potential to radically change how we search. It is coming to Search this summer.

Marie’s thoughts: I tried Gemini Live at I/O. I pointed my phone’s camera at a display Google had set up with items on a bookshelf. The Googler suggested I ask: “Which of these books would be a good summer read?” She said the system would probably first search for “what is a good summer read,” then use the camera to see which books were on the shelf, search for information about them and put together an answer for me. Apparently I should read “Thinking, Fast and Slow” this summer!

Live search with Gemini Live radically changes how we can search for information.

In Google’s blog post on performing well in AI experiences in Search, they emphasized having high-quality images and videos in our content. I would encourage you to check which sites AI mode surfaces and see whether you can find evidence that they use unique and helpful multimodal content.

Additional learning:

Google’s demos of Gemini Live

Top ways to ensure your content performs well in Google’s AI experiences on Search

Mike King found what is probably the patent behind AI mode. I can’t wait to dig into it.

Gemini in glasses and XR (extended reality)

I got to try the glasses in a demo at I/O. So very, very cool.

We were not allowed to record video, but here I am with the prototype glasses. When they come to market, they will be made by Gentle Monster and Warby Parker, so they will look much nicer.

Gemini talks to you through the arms of the glasses, and you also get a display that appears in your lenses. It is super intuitive. I didn’t see it at first, but when I refocused my eyes it was super clear and easy to read.

I need prescription lenses, but the Project Astra team was able to take my glasses, scan them and then put a matching prescription insert into the prototype. Very cool.

You can even have Gemini show you Google Maps and give you directions as you walk.

Incidentally, the first Googler I spoke with at I/O worked on Maps. We had an interesting conversation. Iykyk. 😛

I don’t know how to prepare for this new kind of search, other than perhaps making sure we have good images on our websites and thinking about the new kinds of questions people will ask. Meta’s Mark Zuckerberg has predicted that glasses will replace mobile phones. Maybe one day we will be amazed that we all walked around staring at a small device in our hands.

Personal context

Personal context in AI mode offers personalized suggestions based on your past searches, Gmail and other Google products. This only applies to users.

For example, if you search for “things to do in Nashville this weekend with friends, we’re big foodies who like music,” AI mode can show you restaurants with outdoor seating based on your past restaurant bookings and searches.

Important to know: Personalized search makes keyword tracking difficult. My search results will differ from yours.

Marie’s thoughts: When I heard about personal context, I immediately thought of this line from Google’s helpful content documentation: “Is this the sort of page you’d want to bookmark, share with a friend, or recommend?” It is becoming increasingly important to build a loyal following and to create things so helpful that people come back to your website again and again. We do not yet know to what extent personal context will be used to shape Google’s search results.

Additional learning:

Personal context in AI mode for tailored results. Google Blog.

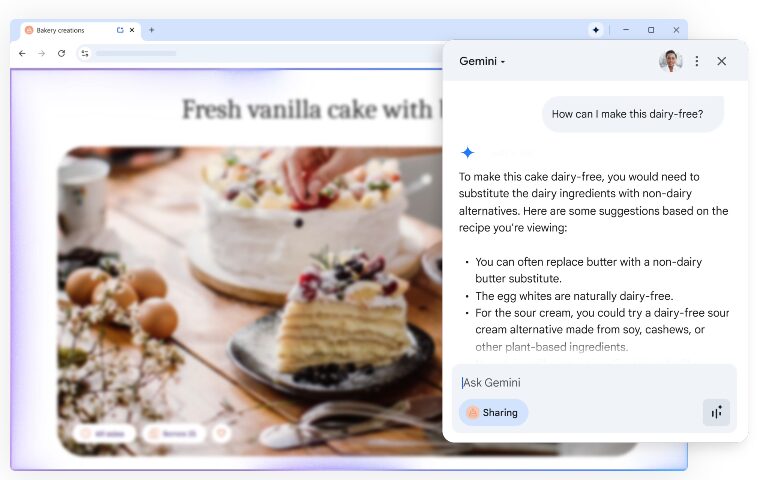

Gemini in Chrome

Gemini will soon be available on every page in the Chrome browser. You can use it to summarize pages and more.

(Thanks Glenn for the above picture)

Important to know: With Gemini in Chrome you can turn any content into something personal for you. The obvious use is summarizing content. You can also use Gemini Live to talk to Gemini about whatever page you are on. Google gave the following examples:

- On a page of family game ideas, you can ask Gemini: “Which of these games are best suited for large groups?”

- You can ask Gemini to simplify a complicated topic discussed in an article.

- You can ask for modifications to recipes.

- Have Gemini summarize the reviews on a page.

- You can ask Gemini to create a practice quiz on the content you are viewing.

Gemini in Chrome is rolling out this week to English-language Chrome users on Windows and macOS. Google says: “In the future, Gemini will be able to work across multiple tabs and navigate websites.”

Marie’s thoughts: I don’t know whether we will get any reporting on whether or how people use Gemini in Chrome with our websites. I have a feeling this will change the way we consume content and probably the way we write it. For example, if I write a post about assessing a traffic drop, I could include prompts for readers who have Gemini in Chrome, such as: “Ask Gemini in Chrome to adapt this method for your website.”

Additional learning:

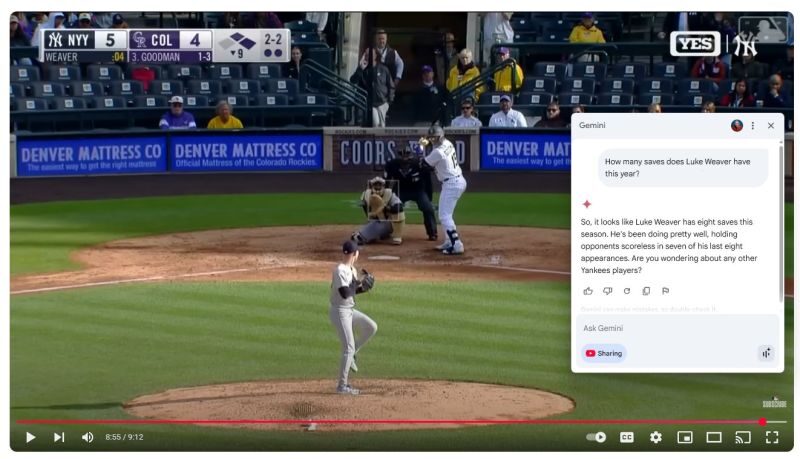

Project Mariner / agent mode

Project Mariner uses AI to complete tasks for you in your browser. That is a big deal.

Important to know: Project Mariner can carry out tasks for you in up to ten browser windows. It will have a feature called “teach and repeat.” It is currently only available in the US to subscribers of the new $249.99/month Ultra plan, but will roll out more broadly this summer.

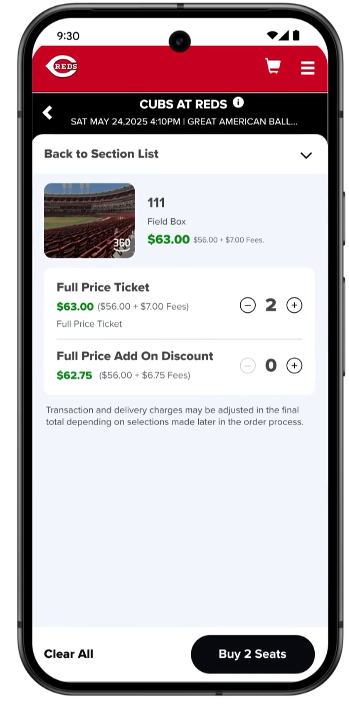

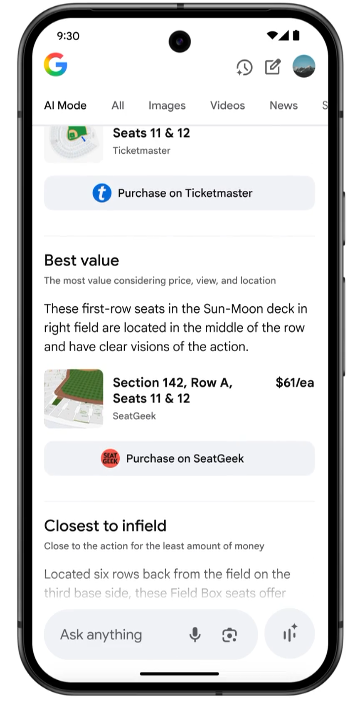

Some Project Mariner capabilities are coming to AI mode as “agent mode.” The example given was buying tickets for a sporting event.

We will see this first for event tickets, restaurant reservations and local appointments. Google is working with companies such as Ticketmaster, StubHub, Resy and Vagaro. They did not share how other providers can be added as sources this agent could draw on.

Marie’s thoughts: I tried Project Mariner at Google I/O and boy, does it have a lot of potential. The Google representative agreed that I should be able to do what I want with it: take my newsletter and publish it on my website, then go to ConvertKit and prepare an email for me to send out, then go to my social media profiles and share it. He said I can essentially teach Project Mariner to do this for me. We will be able to teach it “repeatable tasks” so it can remember them and carry them out when needed.

I can imagine Project Mariner will be incredibly useful for client work. I wonder whether it could run searches in AI mode and report on “rankings” for us.
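Google did not show how “teach and repeat” works under the hood, so the following is only a mental model: record a named sequence of steps once, then replay it later with new inputs. Every name here (`Step`, `TaskRecorder`, the action verbs and the `{title}` placeholder) is hypothetical, and instead of driving a real browser the replay just returns a log of the actions it would take.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    action: str      # hypothetical verb, e.g. "open", "paste", "share"
    target: str      # where the action happens
    value: str = ""  # may contain {placeholders} filled in at replay time


@dataclass
class TaskRecorder:
    tasks: dict[str, list[Step]] = field(default_factory=dict)

    def teach(self, name: str, steps: list[Step]) -> None:
        """Store a demonstrated sequence of steps under a name."""
        self.tasks[name] = list(steps)

    def repeat(self, name: str, **inputs: str) -> list[str]:
        """Replay a taught task, filling placeholders with new inputs.

        Returns a log of the actions that would be performed.
        """
        log = []
        for step in self.tasks[name]:
            value = step.value.format(**inputs)
            log.append(f"{step.action} {step.target} {value}".strip())
        return log


recorder = TaskRecorder()
recorder.teach("publish newsletter", [
    Step("open", "blog editor"),
    Step("paste", "post body", "{title}"),
    Step("open", "email tool"),
    Step("paste", "draft email", "New post: {title}"),
    Step("share", "social profiles", "{title}"),
])

for line in recorder.repeat("publish newsletter", title="Google I/O 2025 recap"):
    print(line)
```

The design choice worth noticing is the split between the taught steps and the inputs: teaching happens once, and each repeat only supplies what changes, such as the newsletter title.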

Agent mode uses MCP (Model Context Protocol) to access tools and services on the web. Here is a bunch more about MCP in The Search Bar.

I suspect that at some point our personal agents will do more searching than we do. Here is more about how Google’s Agent2Agent protocol is set to radically change how websites function.
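For readers curious about what MCP traffic looks like: MCP messages are JSON-RPC 2.0, and a client asks a server to run one of its tools with a `tools/call` request carrying the tool `name` and its `arguments`. The tool name `find_tickets` and its arguments below are hypothetical, and this sketch only builds and parses the message; it does not connect to a real MCP server.

```python
import json


def make_tool_call(request_id: int, tool: str, arguments: dict) -> str:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message string."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool and arguments, for illustration only.
wire = make_tool_call(1, "find_tickets", {"event": "baseball game", "max_price": 120})
print(wire)
```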

Additional reading:

Agentic capabilities to get work done for you. Google Blog.

Google is bringing an “agent mode” to the Gemini app. The Verge.

Custom charts and graphs to visualize data

This AI mode feature analyzes complex datasets and creates graphics for you.

Important to know: You can ask questions in AI mode, and Google uses AI to pull together data from multiple sources and generate visualizations for you.

Marie’s thoughts: These custom visualizations look very helpful for searchers, but I fear they will hurt many websites. For example, say I am searching for the best credit cards for me, or the best player to slot into my QB spot for fantasy football. Usually these searches would end on websites. Now I have very helpful information right in the search results, collected for me, presented as a chart and available for me to ask follow-up questions about.

I can see this being exceptionally helpful in combination with personalized results.

Additional reading:

Custom charts and graphs to visualize data. Google Blog.

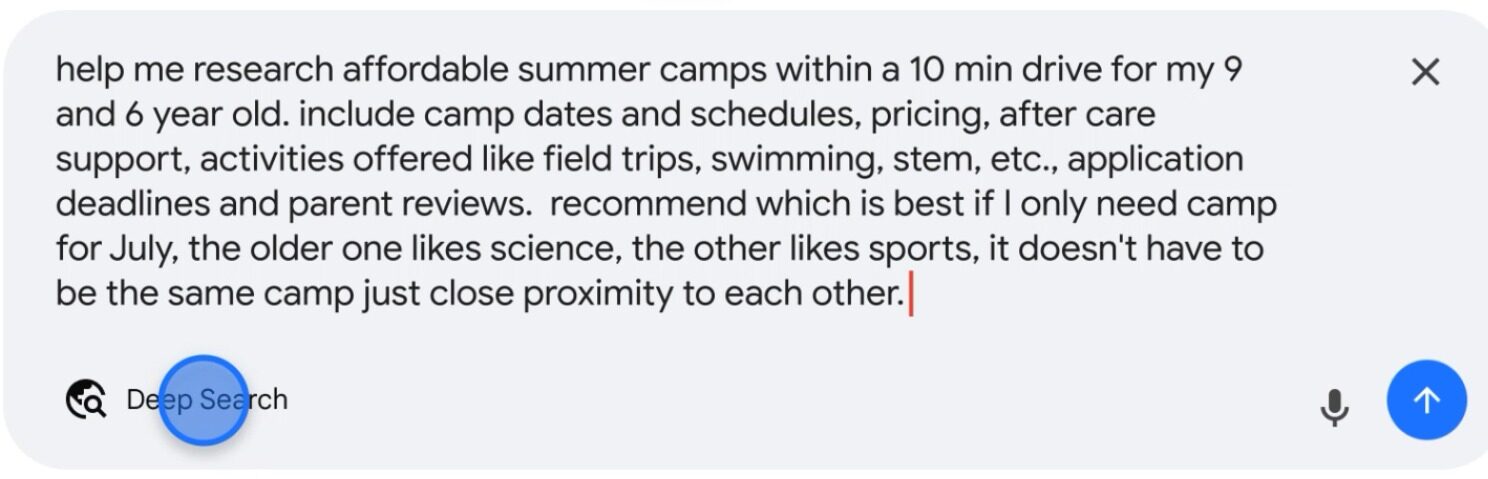

Deep Search in AI mode

You have probably heard of Deep Research in the paid versions of ChatGPT and Gemini. Soon a similar product called Deep Search is coming to AI mode in the search results.

Important to know: Deep Search uses the same query fan-out technique as AI mode, but takes it to the next level. It can issue hundreds of searches, reason across disparate pieces of information and create an expert-level, fully cited report in just minutes. This sounds very similar to Deep Research, except that it is available in AI mode in Search.

Marie’s thoughts:

Deep Research in both Gemini and ChatGPT is extremely useful. I use it for personal research, such as “I want to do this thing. Give me insight into how it works and what I can charge,” or for client research, such as “Explore the reputation of (client). What are they known for? What potential reputation concerns do they have, and how can they improve?”

In some cases I think it will be good to be cited in Deep Search. When I did deep research on how to make worm tea from the worm castings in our compost, I read every site Gemini’s Deep Research linked to. In many cases, however, I think searchers will not click through.

Additional learning:

Deep Search in AI mode to help with in-depth research. Google Blog.

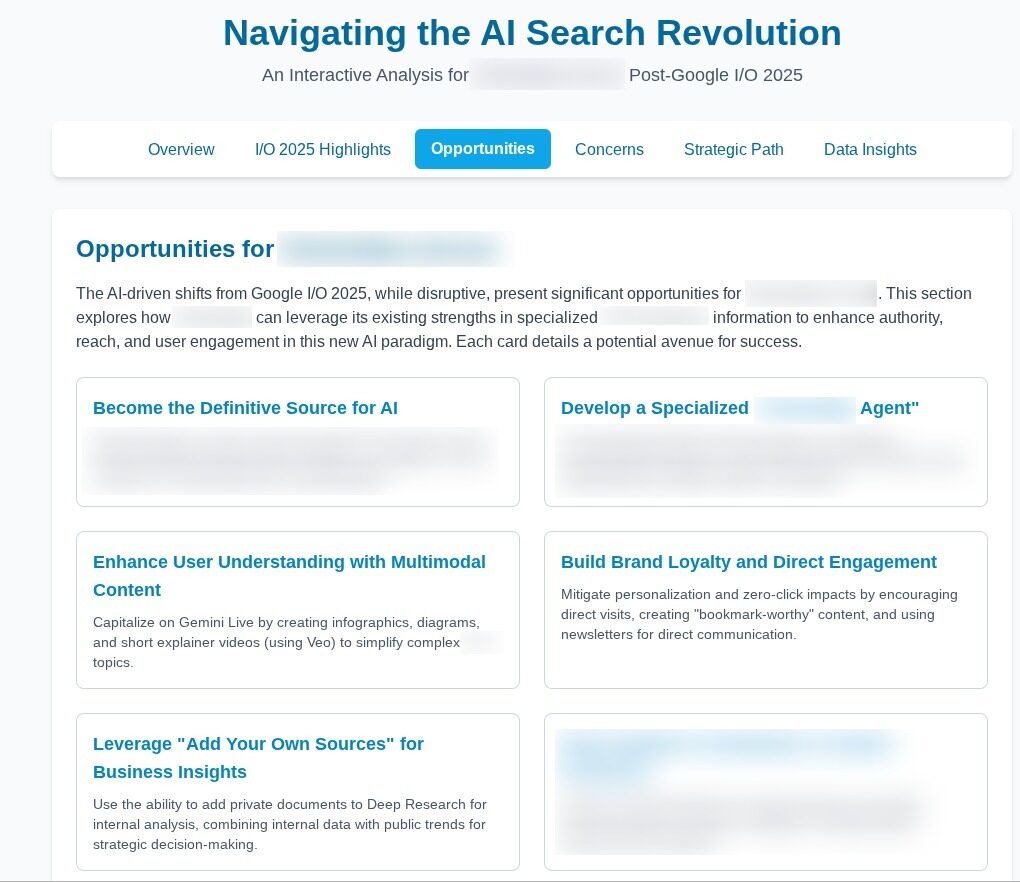

Add your own sources to Deep Research

Gemini’s Deep Research is so good. Now you can add your own Google Drive documents to a Deep Research report.

Important to know: You can now create custom Deep Research reports that combine public data with your own private PDFs, documents and images. Eventually, we will also be able to pull in information from Gmail.

Marie’s thoughts: There is so much we can do with this! Google suggests taking internal sales figures in PDFs and asking Deep Research to cross-reference them with public market trends. Or an academic could pull in journal articles to enrich her literature review.

After I wrote this article, I gave it to Gemini along with a few monthly reports for one of my clients. I then asked for a Deep Research report specific to this client to help them understand how the changes announced at I/O could affect them. It produced a thorough, fairly detailed document. I need to experiment with better inputs. Then it suggested creating an interactive website from this data. It now lives at a special URL on my website that this client can see. I also created a podcast with one click to explain the changes to this client.

This was done with one quick prompt. I will refine it and create even better client reports from it.

Once Deep Research can dig into Gmail, I can see all sorts of possibilities.

Additional learning:

Get deeper insights: Now add your own sources to Deep Research.

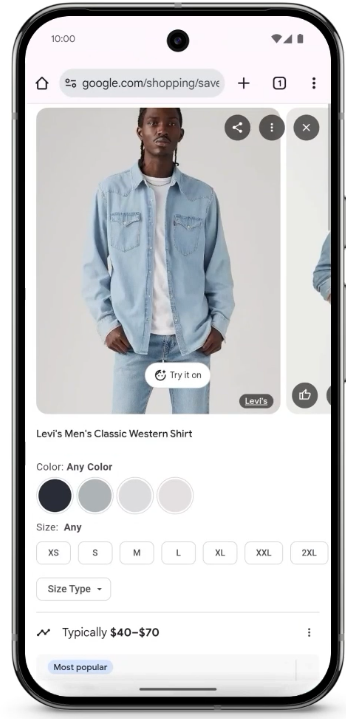

Try on

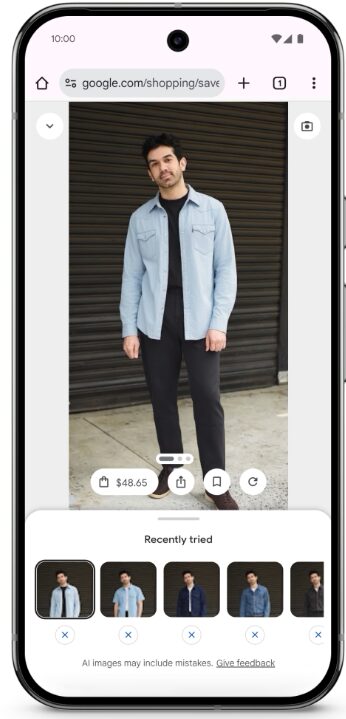

Google’s new try-on feature will change the way we buy clothes and encourage more people to shop online.

Important to know: You will be able to upload a photo of yourself from your camera roll and see what clothing from a website looks like on you.

This feature is powered by the Shopping Graph (Google’s Knowledge Graph for products) and a custom image generation model that understands the human body and the nuances of clothing.

You can test this feature in Search Labs. It is currently available in the US. It is available to any merchant with a Shopping feed that is eligible for free listings.

Additional reading:

Learn more here. Google Blog.

If you are a clothing retailer, read the requirements here.

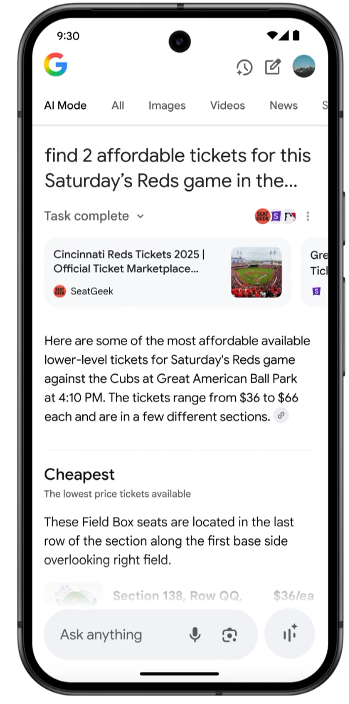

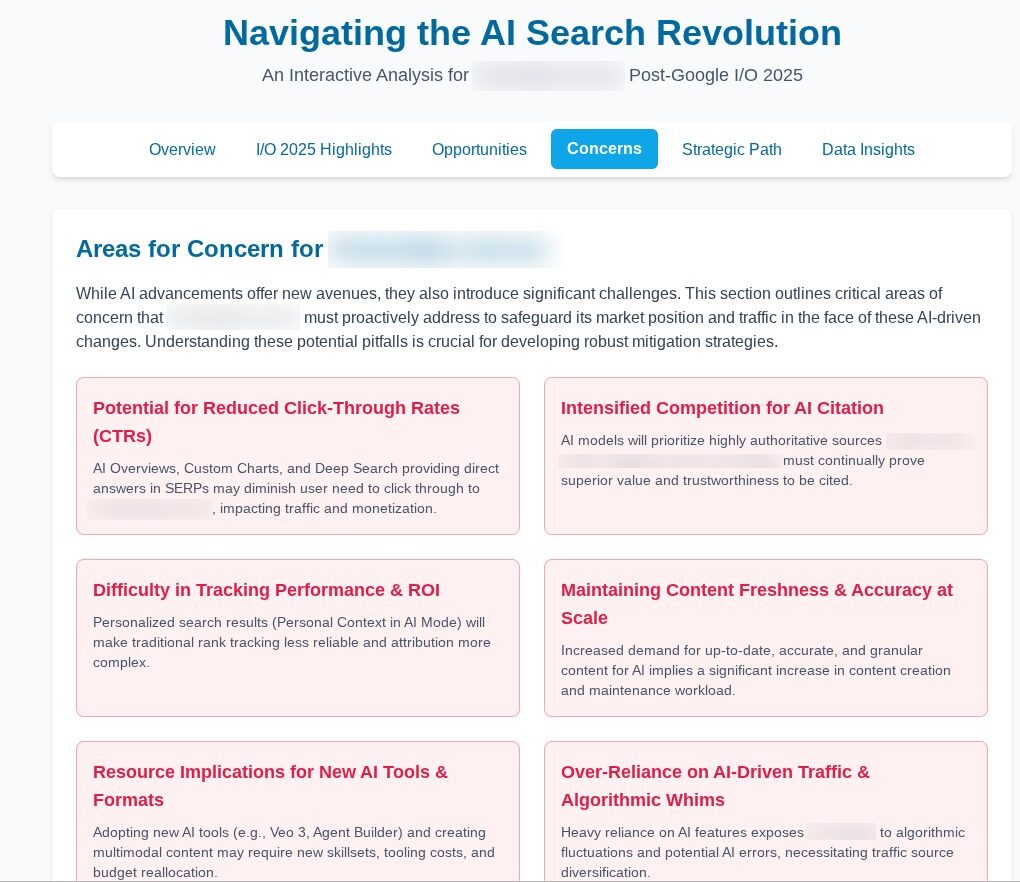

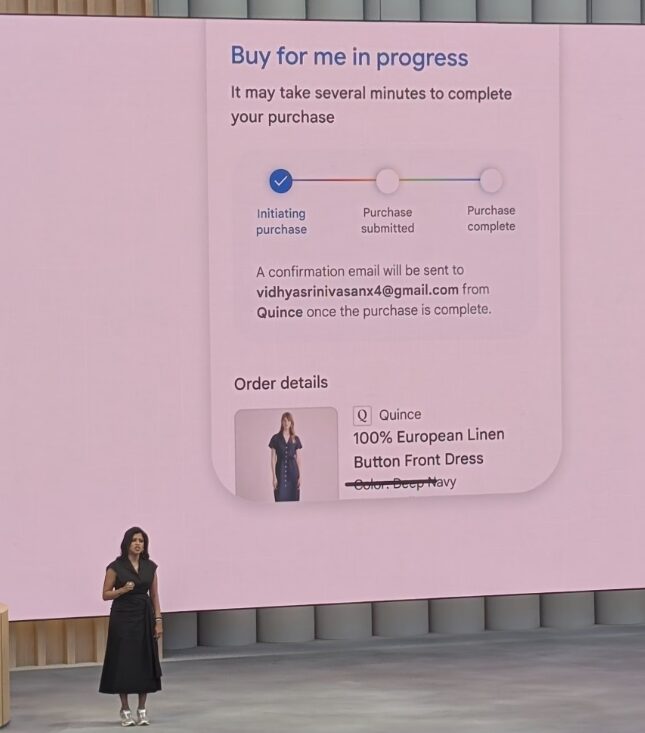

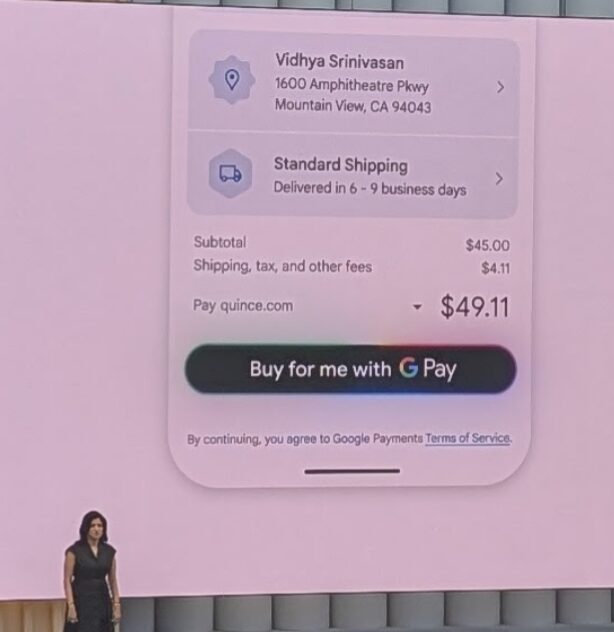

Agentic checkout: Buy for me with Google Pay + price tracking

Whoa. This has the potential to change the way people buy online.

Important to know: Google introduced “agentic checkout.” Once you have found a product you want to buy, you can tap “track price” on any product listing. You can set your preferred size, color or other options, and your shopping agent will keep an eye on the product and notify you of price drops.

When the price drops, Google can add the item to your cart and check out on your behalf with Google Pay.

This will roll out to product listings in the US in the coming months.
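Google did not describe how the shopping agent works internally. As a rough mental model, assuming each tracked item has a target price and the agent is fed periodic price checks, the core logic could look like this. `TrackedItem`, `check_price` and the `buy` callback are hypothetical names; the callback stands in for the add-to-cart and Google Pay checkout step.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TrackedItem:
    name: str
    size: str
    color: str
    target_price: float
    notifications: list[str] = field(default_factory=list)
    purchased: bool = False


def check_price(item: TrackedItem, current_price: float,
                buy: Callable[[TrackedItem, float], None]) -> None:
    """Handle one price observation for a tracked item.

    If the item has not been bought yet and the price is at or below the
    target, record a notification and hand off to the checkout callback.
    """
    if item.purchased or current_price > item.target_price:
        return
    item.notifications.append(f"{item.name} is now ${current_price:.2f}")
    buy(item, current_price)
    item.purchased = True


def fake_checkout(item: TrackedItem, price: float) -> None:
    # Stand-in for "add to cart and pay with Google Pay" (hypothetical).
    print(f"Buying {item.name} ({item.size}, {item.color}) for ${price:.2f}")


jacket = TrackedItem("rain jacket", "M", "green", target_price=80.0)
for observed in [99.0, 89.5, 79.99, 75.0]:
    check_price(jacket, observed, fake_checkout)
```

Only the first price at or below the target triggers a purchase; the later, even lower price is ignored because the item is already bought. Whether a real agent should keep watching after buying (for price-match refunds, say) is exactly the kind of policy question this sketch leaves open.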

Marie’s thoughts: This feature has significant implications. Merchants will compete on price. I suppose that is a good thing for buyers?

Many of the things we optimize as SEOs, such as improving forms, streamlining add-to-cart and more, mean very little compared with simply offering products at the lowest price with the best shipping and return policies.

I can imagine this feature becoming very popular in combination with live search. Hey Gemini … what is this bug on my plant? Hey Marie … looks like you have aphids. Do you want me to find a good product for you? Gemini buys it for me, choosing a good price with the fastest shipping. And I didn’t even touch my computer or phone. Speaking of … let’s talk about Wing.

Wing delivery

Google only mentioned Wing when discussing how it helped Walmart deliver supplies for disaster relief. However, it is not hard to imagine that one day we could have Wing deliveries at home. At I/O I had a “cake in the sky,” a delicious fruit tart delivered across the event by drone. We live in the future.

Veo 3

Google’s video model, Veo, is incredible. With Veo 3 you can now add voices and sound effects. You can also use Google Lyria to add unique music.

Important to know: Veo 3 is almost good enough to convince people that its videos are real. We are entering an age in which it will be hard to know what is real! Google introduced Flow, a video editor for Veo, but you need a paid Google AI plan to use it. At the moment you need the super expensive Ultra plan, which is $249.99/month.

Marie’s thoughts: Veo 3 will revolutionize how content is created online. I attended a fireside chat with Demis Hassabis and filmmaker Darren Aronofsky, in which Aronofsky shared how Veo allowed his team to be incredibly creative.

This entire video, including the sound effects and voices, was made with Veo 3.

https://www.youtube.com/watch?v=mcfchyae6p8

Additional learning:

Fuel your creativity with new generative media models and tools. Google Blog.

Darren Aronofsky and Demis Hassabis on storytelling in the age of AI.

New AI plans

Now, above the $19.99/month Google AI Pro plan, there is a Google AI Ultra plan at $249.99/month. I know that seems ridiculously expensive. However, Project Mariner is currently only available on Ultra. Once I can use Project Mariner, I can easily see it saving me many hours a month and being worth the money.

The Ultra plan has the highest level of access to the latest models, Flow, video generation tools, Veo 3 (with sound) and Project Mariner.

The Ultra plan is initially only available in the US, but other countries will be added soon. I will sign up as soon as it is available in Canada.

Additional reading: https://gemini.google/subscriptions/

Google Beam (formerly Project Starline) uses AI and a light field display so people appear in 3D on video calls.

We will be able to use live translation with Google Beam and also in Google Meet.

Google showed a new kind of model, Gemini Diffusion. Instead of generating text one token at a time, it creates outputs by refining noise step by step. It is incredibly fast, 5x faster than what we have today. You can use this link to join the waiting list.
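Google did not go into Gemini Diffusion’s internals, so this is only a toy to show why refining a whole output in parallel can take fewer steps than writing it one token at a time. It borrows the masked-denoising flavor of text diffusion: every position starts out as “noise” (a mask), and at each step a share of the remaining positions is filled in at once. The oracle simply copies the known answer and picks positions at random (real models would pick by confidence), so this illustrates the step schedule, not an actual model.

```python
import math
import random

MASK = "_"


def autoregressive_steps(target: list[str]) -> int:
    """A left-to-right decoder emits one token per step."""
    return len(target)


def diffusion_decode(target: list[str], steps: int,
                     seed: int = 0) -> tuple[list[str], list[str]]:
    """Toy masked-denoising decode with an oracle 'model'.

    Starts fully masked and, at each of `steps` refinement steps, unmasks an
    equal share of the still-masked positions in parallel. Returns the final
    tokens and a trace of the intermediate states.
    """
    rng = random.Random(seed)
    tokens = [MASK] * len(target)
    trace = []
    for step in range(steps):
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        k = math.ceil(len(masked) / (steps - step))
        for i in rng.sample(masked, k):
            tokens[i] = target[i]  # the oracle "denoises" these positions
        trace.append(" ".join(tokens))
    return tokens, trace


sentence = "diffusion models refine a whole draft at once".split()
final, trace = diffusion_decode(sentence, steps=3)
for state in trace:
    print(state)
print(autoregressive_steps(sentence), "autoregressive steps vs 3 refinement steps")
```

Eight tokens take eight steps left to right but only three refinement passes here. The speed-up in a real system depends on how many passes the model needs for good quality, which this toy does not model.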

Jules is a new coding agent that connects to your codebase and completes tasks, exporting directly to GitHub. It looks pretty good!

Gemini 2.5 Pro Deep Think is an enhanced reasoning mode for Gemini 2.5 Pro. It uses parallel thinking techniques that lead to incredible performance.

Google is working on creating a full world model. They say it is a critical step toward becoming a universal AI assistant, and that this is their ultimate vision for Gemini.

Lyria 2 delivers high-fidelity music and professional audio from a prompt. Join the waiting list here.

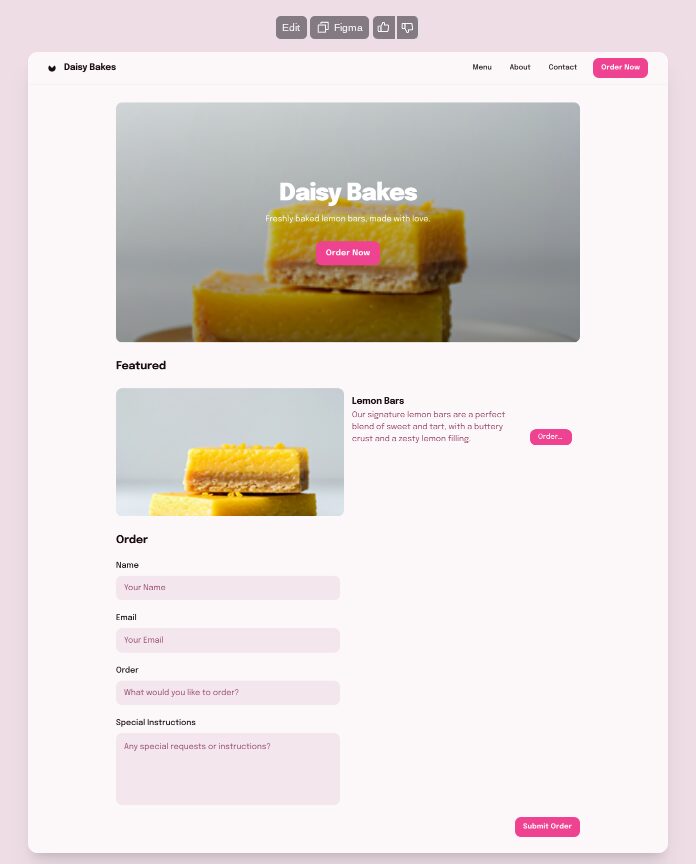

Stitch lets you create an app design from a prompt. Once you’re done, you can copy your designs into Figma. I would encourage you to try it out!

It was an honor to be in the same room as Demis Hassabis, co-founder of Google DeepMind, and Sergey Brin, co-founder of Google, as they shared their vision for the future. I will create more content about what I learned from their conversation.

I want to end this blog post with a link to Google’s blog post about their vision for building a universal AI assistant. The new era we entered this week moves us toward Google’s ultimate goal of becoming a universal assistant.

Google is not alone in this pursuit. This same week, OpenAI announced a partnership with a company called … can you believe it? io. Jony Ive, the man who designed the iPhone and the MacBook Pro, will work with Sam Altman and team to create new devices that completely reimagine the way we use computers and become our personal companions and assistants:

https://www.youtube.com/watch?v=W09BIPC_3MS

We have some interesting times ahead of us!

Marie

Exercises for Search Bar Pro members

This week there is a special edition of Marie’s Notes with exercises to help you learn the new skills we need as AI changes search:

- Study AI mode (including a spreadsheet, instructions and prompts)

- Prepare for live search

- Prepare for personalized search

- Experiment with Deep Research

- Play with Veo

- Dig into the AI mode patent discovered by Mike King

If you are serious about learning AI as it changes the world, we want you to join us in The Search Bar Pro! Your membership at $42/month ($480 per year) includes tips, strategies and prompts from me, Marie’s Notes, community discussion, tutorials and twice-monthly happy hour meetups with me, as we help each other learn to use AI and succeed.

Do you have any questions?

Join me on Thursday, May 29, 2025 at 12 p.m. for a live podcast episode where we talk about everything covered in this article.