Four methods, and only two are correct. In this post, I’ll cover the various ways I’ve seen site owners try to block content that violates Google’s site reputation abuse policy, including real examples of which methods work and which don’t.

Last year I wrote a post explaining why an algorithmic approach to handling site reputation abuse was the way to go for Google (versus using manual actions). Handling the situation algorithmically would obviously be far more scalable: Google could go after more violating content, and the webspam team wouldn’t have to make one-off decisions about which sites should receive a manual action.

Fast forward to today, and Google is still using manual actions to handle site reputation abuse, and some sites are still slipping through the cracks. In addition, some sites are handling the blocking of content incorrectly from a technical SEO standpoint. For example, they know they have content that violates Google’s site reputation abuse policy, but they don’t block it properly. That means the content can still rank, which could lead to a manual action, etc.

Over the past few months, I’ve seen enough strange technical SEO situations involving site reputation abuse that I decided to write a post clearing up the confusion about the correct way to block content. Below, I’ll cover how to properly handle site reputation abuse from a technical SEO perspective, while also providing examples of what I’m seeing in the wild.

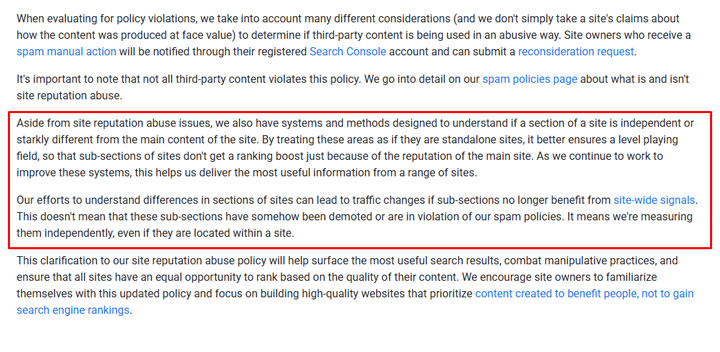

First, a note about Google’s “very different” algorithm:

We know Google has improved its systems for identifying when a section of content is “independent or very different” from the main content of a site. I covered this in my post titled “A Nightmare on Affiliate Street,” where I explained how sections of certain sites dropped because they no longer benefited from site-level signals (which had been propping up their rankings).

It would make sense for Google to use those systems as the foundation for handling site reputation abuse algorithmically. However, Google has explained that we probably won’t see a (fully) algorithmic approach anytime soon. The problem is that Google needs to get those systems to a point where they don’t cause mass collateral damage. For now, Google is handling site reputation abuse via manual actions.

For example, Google recently rolled out manual actions in two waves for sites in Europe. Regardless, I would get your technical SEO house in order so you don’t run into problems with manual actions or with an algorithm update that tackles site reputation abuse.

Here is information from Google’s blog post explaining more about its “very different” system:

Four ways sites are handling site reputation abuse, and why only two are correct. I’ll start with the incorrect methods:

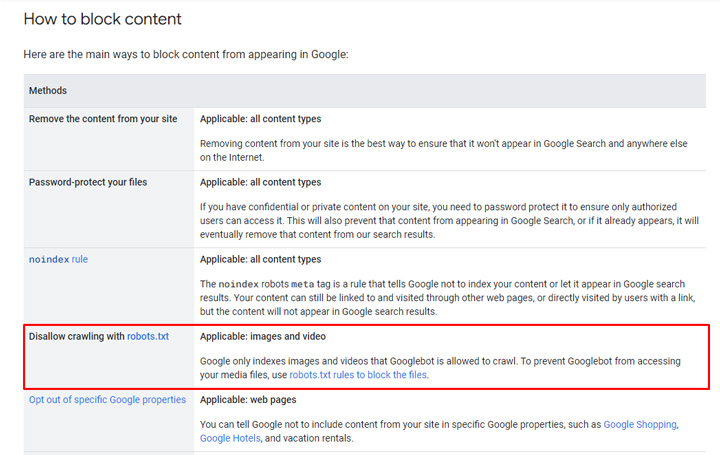

1. Blocking via robots.txt. NOT VALID

First, blocking via robots.txt is not a valid way to deal with a site reputation abuse manual action. I know this confuses many site owners, but disallowed pages can still be indexed and can still rank, just without Google crawling the content. Google’s own documentation on handling content that violates the site reputation abuse policy even addresses blocking via robots.txt. I also covered this in my first post about site reputation abuse…

When it comes to keeping content out of the index, blocking via robots.txt is only a valid approach for images and videos, not HTML documents. Therefore, it’s not the right way to handle content that violates Google’s site reputation abuse policy.

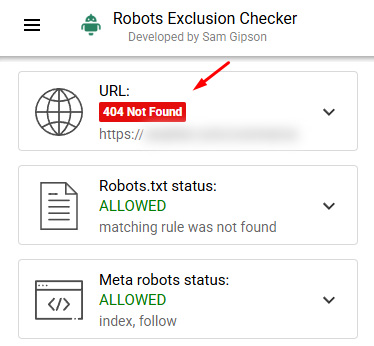

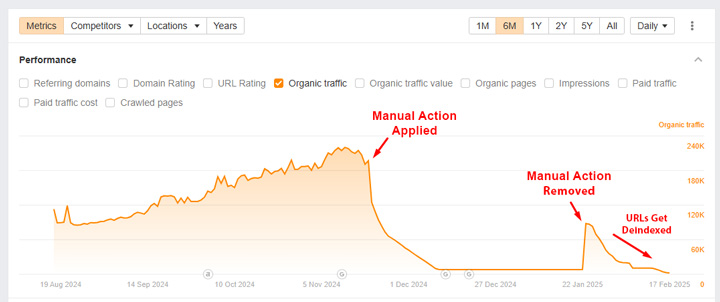

We actually saw this play out with the latest manual actions in Europe. The following site somehow got out of its manual action, but it was both noindexing the content AND blocking it via robots.txt. The problem is that Google can’t crawl the content to see the noindex tag. So Google can keep the pages indexed, just without crawling the content. And yes, those pages can still rank.

Below, you can see visibility tank when the site received the manual action, and then surge back once the site was both noindexing the content and disallowing it via robots.txt. I’m assuming the site had the manual action removed (incorrectly) when it did that. And since Google couldn’t crawl the URLs to see the noindex tag, the pages remained indexed, just without Google crawling the content.

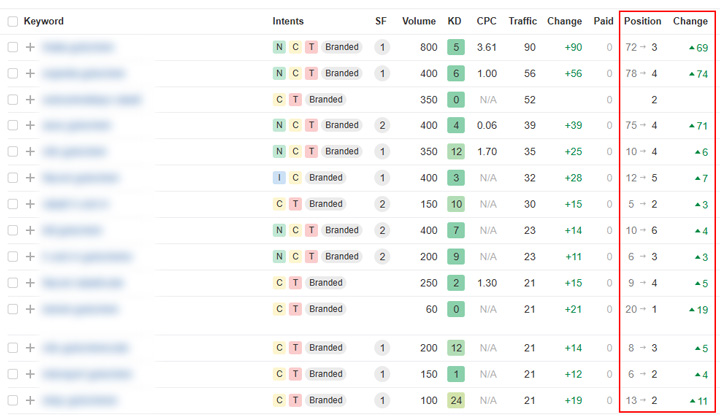

And yes, the pages ranked… and ranked very well. Note, I’m not sure whether this slipped past the webspam team or whether the site started disallowing the content via robots.txt after the manual action was removed. Either way, the site started ranking very well again.

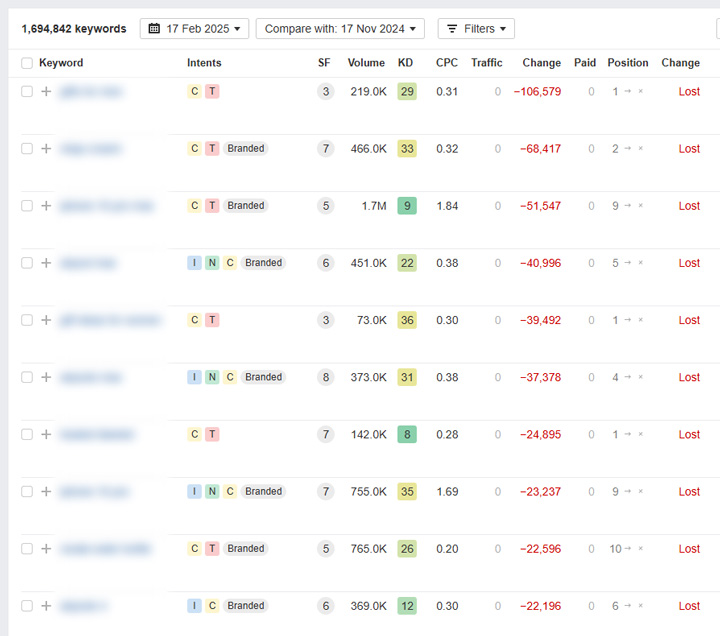

Here are some rankings after the manual action was removed and the site jumped back into the SERPs with content that violates Google’s site reputation abuse policy:

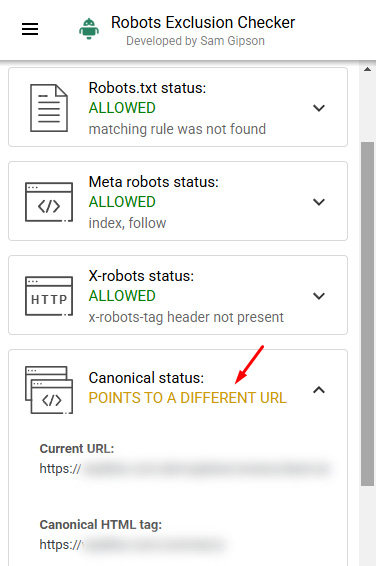
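If you want to catch this conflict before Google does, one quick check is whether any URL you’ve noindexed is also disallowed for Googlebot. Below is a minimal Python sketch using the standard library’s `urllib.robotparser`. The robots.txt rules, the `/coupons/` directory, and the helper names (`googlebot_can_crawl`, `hidden_noindex_urls`) are hypothetical. Also note that `urllib.robotparser` uses simple prefix matching with first-match precedence, so it only approximates how Googlebot interprets robots.txt (it doesn’t support `*` or `$` wildcards inside paths, for example).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that disallows the section hosting the violating content.
ROBOTS_TXT = """\
User-agent: *
Disallow: /coupons/
"""


def googlebot_can_crawl(robots_txt: str, url: str) -> bool:
    """Return True if the robots.txt rules allow Googlebot to fetch `url`.

    urllib.robotparser uses prefix matching with first-match precedence,
    which only approximates Google's own robots.txt rules.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


def hidden_noindex_urls(robots_txt: str, noindexed_urls: list[str]) -> list[str]:
    """Return the noindexed URLs that Googlebot is blocked from crawling.

    For these URLs, Google can never see the noindex tag, so they can
    stay indexed (and rank) without their content being crawled.
    """
    return [url for url in noindexed_urls if not googlebot_can_crawl(robots_txt, url)]


if __name__ == "__main__":
    noindexed = [
        "https://www.example.com/coupons/store-a/",
        "https://www.example.com/news/some-story/",
    ]
    for url in hidden_noindex_urls(ROBOTS_TXT, noindexed):
        print("noindex not visible to Googlebot:", url)
```

Any URL this reports needs the robots.txt disallow lifted so Google can crawl it and pick up the noindex.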

2. Canonicalizing the content. NOT VALID

No, that’s not a good idea. Google doesn’t recommend this for handling site reputation abuse either. However, some sites canonicalize the URLs that violate the policy to the top-level directory for that content, or to other URLs. I think the idea is that Google will follow the canonical hint and won’t index all of the site reputation abuse content.

But it’s important to understand that rel=canonical is just a hint, not a directive. Google can ignore the user-selected canonical and index the URLs anyway. And if those URLs are indexed, they can rank.

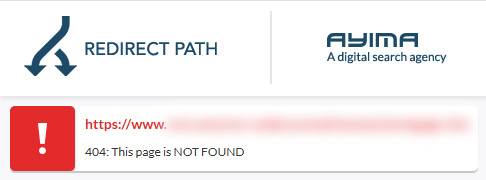

For example, here’s a large, powerful site with a directory of content that violates Google’s site reputation abuse policy. The URLs are canonicalized to another directory (the root URL of that directory)… but that root URL (the canonical page) returns a 404.

And here’s the page all of those URLs are canonicalized to, which 404s:

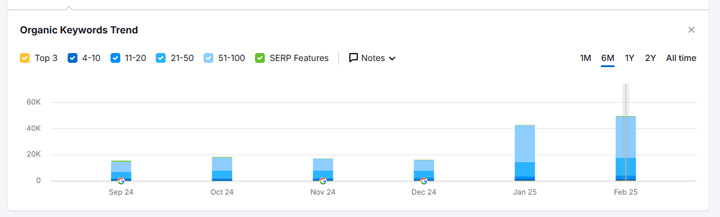

And Google indexed the URLs in the directory anyway, with the folder ranking for over 50,000 queries. Again, this is not the right way to handle site reputation abuse.
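If you’re auditing a section like this, one easy red flag to check for is a canonical that points at a URL that doesn’t resolve, as in the example above. Here’s a minimal Python sketch that pulls `rel=canonical` out of each page with the standard library’s `html.parser` and flags any canonical target whose status code isn’t 200. The page HTML and the status-code map are hypothetical inputs (in practice they would come from a crawl), and the function names are illustrative. A clean result doesn’t mean Google will honor the canonical, since it remains a hint.

```python
from html.parser import HTMLParser
from typing import Dict, Optional
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr_map = dict(attrs)
        rels = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rels and attr_map.get("href"):
            self.canonical = attr_map["href"]


def canonical_of(page_url: str, html: str) -> Optional[str]:
    """Return the absolute canonical URL declared by a page, if any."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return urljoin(page_url, finder.canonical) if finder.canonical else None


def broken_canonicals(pages: Dict[str, str], status: Dict[str, int]) -> Dict[str, str]:
    """Map page URL -> canonical target for targets that don't return a 200.

    `pages` maps URL -> HTML and `status` maps URL -> HTTP status code
    (hypothetical inputs, e.g. exported from a crawler). Targets missing
    from `status` are flagged too, since they weren't verified.
    """
    flagged = {}
    for url, html in pages.items():
        target = canonical_of(url, html)
        if target is not None and status.get(target) != 200:
            flagged[url] = target
    return flagged


if __name__ == "__main__":
    pages = {
        "https://www.example.com/coupons/store-a/":
            '<head><link rel="canonical" href="/coupons/"></head>',
    }
    status = {"https://www.example.com/coupons/": 404}
    print(broken_canonicals(pages, status))
```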

Now for the correct methods of handling content that violates Google’s site reputation abuse policy:

3. Noindexing the content. VALID

If you noindex the content, Google will crawl the URLs and then drop them from the index. Once they’re removed from the index, they can’t rank. Mission accomplished.

Noindex is a directive, not a hint. You don’t have to worry about Google deciding what to do. The pages will be removed from the index once Google crawls the URLs and sees the noindex tag. And again, you shouldn’t block the URLs via robots.txt. As I explained earlier, that can lead to the URLs remaining indexed, just without Google crawling the content. That won’t happen if you simply noindex the content, since Google can crawl the URLs and pick up the noindex tag.

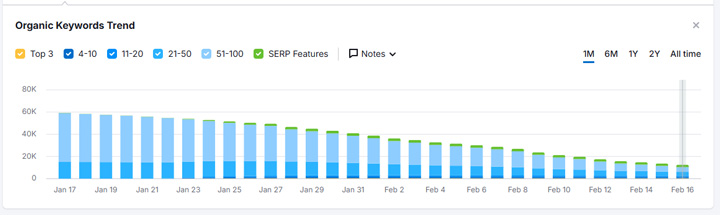

Here’s an example of a site correctly noindexing a directory that violates Google’s site reputation abuse policy. A manual action was not in place. As Google crawled the pages, they were removed from the index and visibility dropped sharply for the directory.

This is the right approach.

Here is the number of keywords the section ranked for over time as Google crawled the URLs, picked up the noindex tag, and removed the pages from the index:

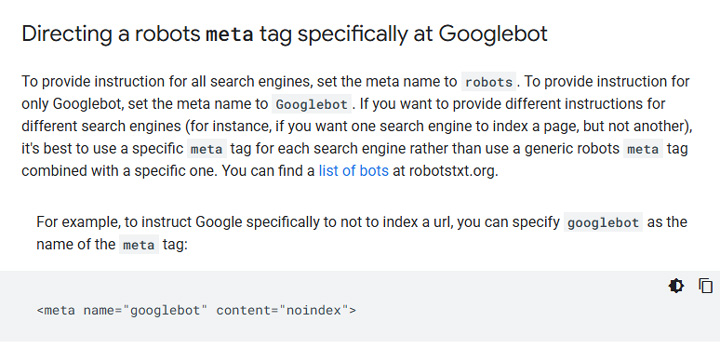
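To confirm a noindex is actually being served, you can check both places it can live: a meta robots tag in the HTML and an `X-Robots-Tag` HTTP header. Below is a minimal Python sketch, assuming you already have each page’s HTML and its `X-Robots-Tag` header values. It treats `noindex` and `none` as noindex, honors `<meta name="robots">` and `<meta name="googlebot">`, and applies header rules only when they are unscoped or scoped to `googlebot:`. The function names are hypothetical, and the header parsing is deliberately simplified (for example, it ignores other Google crawler tokens).

```python
from html.parser import HTMLParser
from typing import Iterable, Set

NOINDEX_RULES = {"noindex", "none"}  # "none" is equivalent to "noindex, nofollow"
# Directives whose values contain a colon, so "name:value" isn't read as "user-agent: rules".
VALUE_DIRECTIVES = {"max-snippet", "max-image-preview", "max-video-preview", "unavailable_after"}


class RobotsMetaParser(HTMLParser):
    """Collect rules from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.rules: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        if (attr_map.get("name") or "").lower() in ("robots", "googlebot"):
            content = attr_map.get("content") or ""
            self.rules.update(rule.strip().lower() for rule in content.split(","))


def header_noindex_for_googlebot(x_robots_tag_values: Iterable[str]) -> bool:
    """Return True if any X-Robots-Tag value applies noindex to Googlebot."""
    for value in x_robots_tag_values:
        prefix, sep, rest = value.partition(":")
        prefix = prefix.strip().lower()
        if sep and "," not in prefix and prefix not in VALUE_DIRECTIVES:
            # "someagent: rules" form: the rules apply only to that crawler.
            if prefix != "googlebot":
                continue
            value = rest
        rules = {rule.strip().lower() for rule in value.split(",")}
        if rules & NOINDEX_RULES:
            return True
    return False


def is_noindexed_for_googlebot(html: str, x_robots_tag_values: Iterable[str] = ()) -> bool:
    """Check both the meta robots tags and the X-Robots-Tag header values."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return bool(parser.rules & NOINDEX_RULES) or header_noindex_for_googlebot(x_robots_tag_values)
```

This only confirms the directive is in the response. Google still has to be able to crawl the URL to see it, so the URL must not be disallowed in robots.txt.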

A note about user-agent-specific blocking:

Also, you can always noindex the URLs just for Google if you want. For example, if you still want the content to rank in Bing or other search engines, you can simply noindex it for Googlebot only.

Here is Google’s documentation on targeting Googlebot via the meta robots tag:
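On the server side, a Googlebot-only noindex can be sent either as `<meta name="googlebot" content="noindex">` in the page head or as an `X-Robots-Tag: googlebot: noindex` response header. Here’s a minimal WSGI sketch in Python (standard library only) that adds both to pages under a hypothetical `/coupons/` directory while leaving the rest of the site untouched. Either signal on its own should be enough; both are shown for illustration. The app and directory are assumptions rather than a drop-in implementation, and the URLs must stay crawlable (not disallowed in robots.txt) for Googlebot to see the directive.

```python
import html
from wsgiref.simple_server import make_server

# Hypothetical directory hosting the content that violates the policy.
GOOGLE_NOINDEX_PREFIX = "/coupons/"


def app(environ, start_response):
    """Serve pages, adding a Googlebot-only noindex under GOOGLE_NOINDEX_PREFIX."""
    path = environ.get("PATH_INFO", "/")
    headers = [("Content-Type", "text/html; charset=utf-8")]
    head = ""
    if path.startswith(GOOGLE_NOINDEX_PREFIX):
        # Scoped to Googlebot: other search engines can still index these pages.
        headers.append(("X-Robots-Tag", "googlebot: noindex"))
        head = '<meta name="googlebot" content="noindex">'
    body = f"<!doctype html><html><head>{head}</head><body>{html.escape(path)}</body></html>"
    start_response("200 OK", headers)
    return [body.encode("utf-8")]


if __name__ == "__main__":
    # Local test server only; keep these URLs crawlable (no robots.txt disallow).
    with make_server("127.0.0.1", 8000, app) as server:
        server.serve_forever()
```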

4. Nuking the content completely. 404s or 410s. VALID

I won’t spend much time on this one. It’s simple… if you remove the content or directory completely, the content obviously can’t rank. Mission accomplished. File a reconsideration request and move on.

But I wanted to point out that this is a big hammer, and there are other sources of traffic beyond Google. That’s why some sites choose to noindex only for Google and not for other engines. For example, you could drive traffic from social media to those sections via advertising, or promote those sections internally once users are already on your site. I’m not saying you should do this, but it’s definitely an option for publishers.

So removing the content completely via 404s or 410s is definitely a valid approach, but for some sites it may not make the most sense.
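If you do go the removal route, the server just needs to return a 404 or 410 for every URL in the removed section. Below is a minimal sketch of a WSGI middleware in Python that answers `410 Gone` for a hypothetical `/coupons/` directory and passes every other request through to the rest of the site (the `site` app here is a stand-in). A 404 would work as well; 410 is used only because it states that the removal is intentional.

```python
from typing import Callable, Iterable

# Hypothetical directory that was removed from the site.
REMOVED_PREFIX = "/coupons/"

WSGIApp = Callable[[dict, Callable], Iterable[bytes]]


def gone_for_prefix(wrapped: WSGIApp, prefix: str = REMOVED_PREFIX) -> WSGIApp:
    """WSGI middleware that returns 410 Gone for every path under `prefix`."""

    def middleware(environ, start_response):
        if environ.get("PATH_INFO", "").startswith(prefix):
            body = b"410 Gone: this section has been removed."
            start_response("410 Gone", [
                ("Content-Type", "text/plain; charset=utf-8"),
                ("Content-Length", str(len(body))),
            ])
            return [body]
        return wrapped(environ, start_response)

    return middleware


def site(environ, start_response):
    """Stand-in for the rest of the site."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"ok"]


application = gone_for_prefix(site)
```

To try it locally, `wsgiref.simple_server.make_server("127.0.0.1", 8000, application)` will serve it; in production the same rule would typically live in the web server or CDN configuration.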

Here is a site that 404’d all of the content in a directory that violates Google’s site reputation abuse policy:

And you can see the massive drop in rankings over time as Google picked up the 404s. Again, it’s a big hammer, but a valid approach:

Summary: Know the right approach for handling site reputation abuse.

I hope this post cleared up any confusion you may have had about the best approach for handling content that violates Google’s site reputation abuse policy. If you need to block the content, make sure you do it correctly. That means either noindexing the content or removing it completely. Blocking via robots.txt and canonicalizing the content are not valid methods. Both can lead to the content still being indexed and ranking in the SERPs. And if Google sees that, your manual action won’t be removed, or it could be reapplied. Good luck.

GG