Why is this site ranking below a site with less helpful and less accurate information?

We have been digging into the treasure trove of information made available in the trial exhibits and testimony from the DOJ vs. Google case to learn more about how Search works. (The afternoon session of Day 24, Pandu Nayak’s testimony, is something I can’t stop rereading.)

In this article I would like to share my thoughts on Google’s understanding (or lack of understanding) of the accuracy of content. We’ll look at a page from WalletHub.com that performs poorly in Google’s systems even though it may contain more accurate and helpful information. I will share my thoughts on why this is happening, and why I think Google may get better at understanding the accuracy of content on pages.

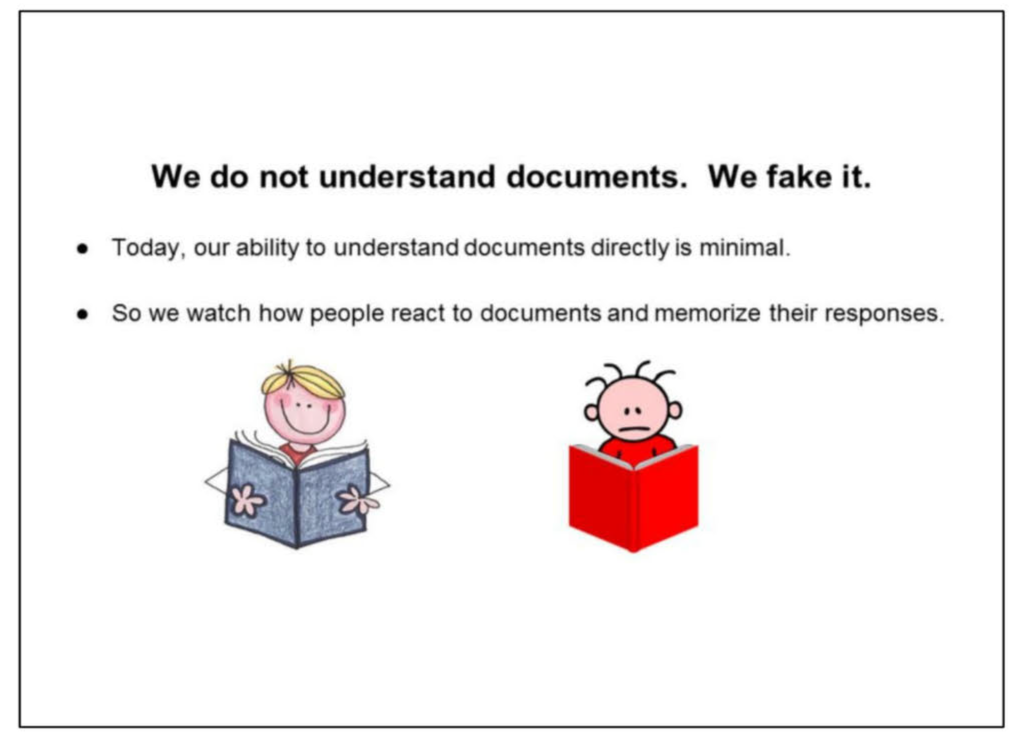

First, let’s look at this fascinating trial exhibit, a Google Slides presentation from December 2016 titled “Q4 Search all hands.”

It shows us that Google doesn’t understand documents. Instead, it fakes understanding by examining the actions of searchers.

Google feigns understanding by examining searchers’ actions

The slides then explain how Search works (bold added by me):

“Let’s start with some background information…

A billion times a day, people ask us to find documents relevant to a query.

The crazy thing is that we don’t actually understand documents. We rarely look at documents beyond a few basic things. We look at people. If a document receives a positive response, we consider it good. If the reaction is negative, it’s probably bad.

To put it bluntly, this is the source of Google’s magic.”

Wow.

Positive and negative reactions

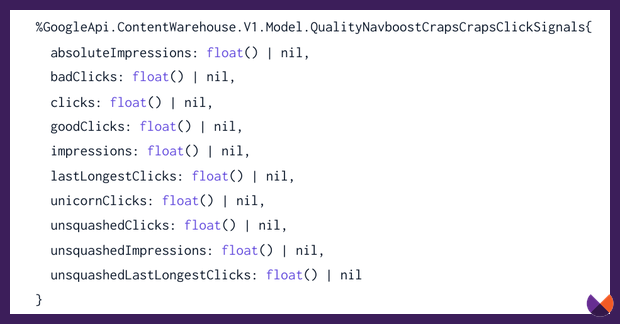

We have learned about Google’s Navboost system and looked at some of the attributes it uses, including goodClicks and badClicks.

This system stores every query searched and remembers how the searcher interacted with the results. It tries to understand whether a search was satisfying so that it can predict even more satisfying results.
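To make the idea concrete, here is a toy sketch in Python of a ranking signal built only from searcher reactions. It is not Navboost: Google has not published how Navboost defines or weighs goodClicks and badClicks, so the class names, the rule for what counts as a “good” click, and the smoothing formula below are all hypothetical. What it does illustrate is the property the slides describe: the score comes entirely from how searchers reacted, and the page content is never read.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ClickStats:
    """Counts for one (query, url) pair. Field names are hypothetical."""
    good_clicks: int = 0
    bad_clicks: int = 0


class ClickMemory:
    """Toy memory of searcher reactions, keyed by (query, url).

    Assumption: a 'good' click is one where the searcher seemed satisfied
    (for example, did not quickly return to the results). Google has not
    published its actual definition.
    """

    def __init__(self) -> None:
        self._stats: dict[tuple[str, str], ClickStats] = defaultdict(ClickStats)

    def record(self, query: str, url: str, satisfied: bool) -> None:
        # Remember how the searcher reacted to this result for this query.
        stats = self._stats[(query, url)]
        if satisfied:
            stats.good_clicks += 1
        else:
            stats.bad_clicks += 1

    def score(self, query: str, url: str, prior: float = 1.0) -> float:
        # Smoothed share of good clicks; a result with no history scores 0.5.
        # .get() avoids creating an entry when scoring an unseen pair.
        stats = self._stats.get((query, url), ClickStats())
        total = stats.good_clicks + stats.bad_clicks
        return (stats.good_clicks + prior) / (total + 2 * prior)

    def rerank(self, query: str, urls: list[str]) -> list[str]:
        # Order candidates purely by past reactions; page content is never read.
        return sorted(urls, key=lambda url: self.score(query, url), reverse=True)


if __name__ == "__main__":
    memory = ClickMemory()
    query = "credit cards with the best rewards"
    # Hypothetical data: both sites satisfy 80% of clickers,
    # but the familiar brand gets clicked twice as often.
    for satisfied in [True] * 8 + [False] * 2:
        memory.record(query, "familiar-brand.example", satisfied)
    for satisfied in [True] * 4 + [False] * 1:
        memory.record(query, "lesser-known.example", satisfied)
    print(memory.rerank(query, ["lesser-known.example", "unseen.example", "familiar-brand.example"]))
    # → ['familiar-brand.example', 'lesser-known.example', 'unseen.example']
```

Nothing in this sketch checks whether a page is accurate. With the smoothing prior assumed here, a result that collects more clicks at the same satisfaction rate scores higher (0.75 versus about 0.71), which is one simple way a preference for familiar brands can leak into a click-based signal.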

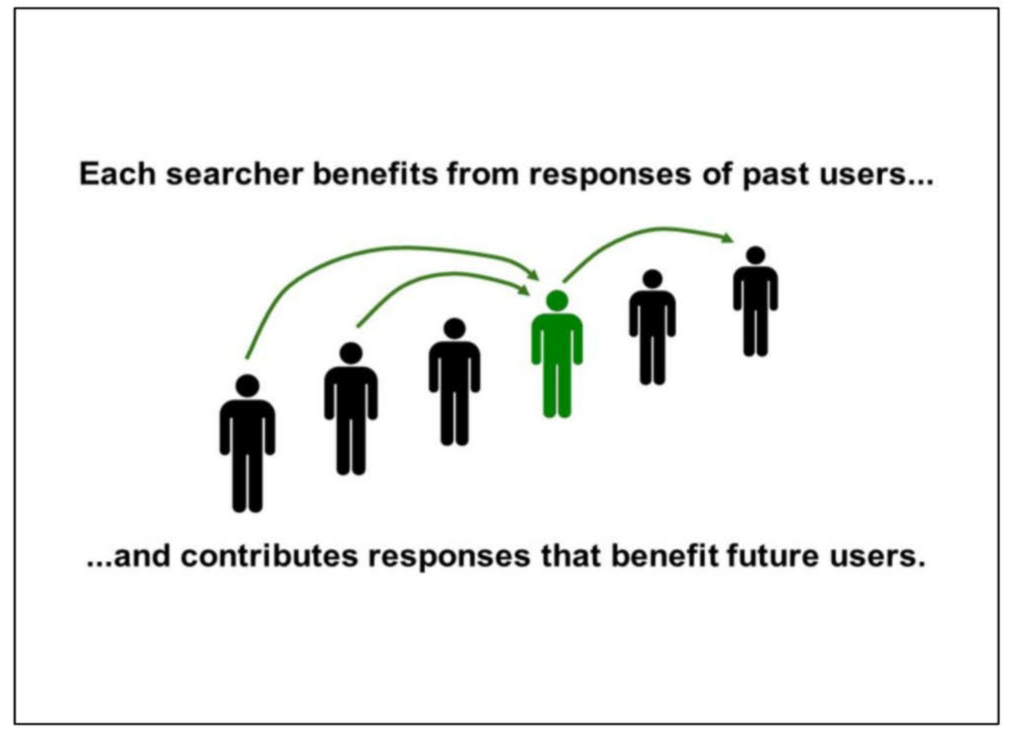

The presentation shows us that every search we perform is influenced by past searches – and influences future searches.

The slides say: “We need to design interactions that allow us to LEARN from users” and “maintain the illusion that we understand.”

The obvious concern with a system that relies so heavily on users’ actions is that it will be influenced by users’ preference for well-known brands. People click on what they recognize.

I was inspired to write this article after reading a post published by WalletHub. I think Google’s system of remembering searchers’ actions without truly understanding the content itself makes its rankings for “credit cards with the best rewards” less accurate and less helpful. I also think this kind of problem might improve soon.

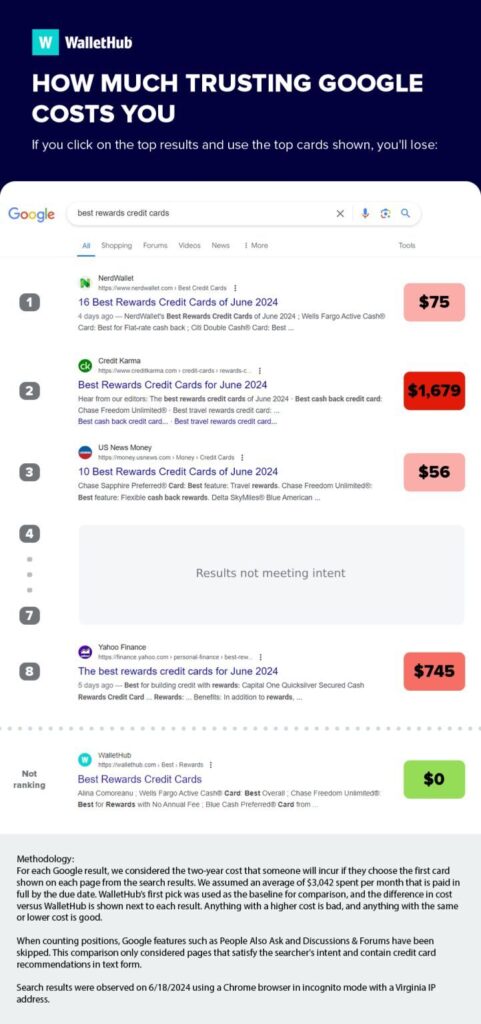

WalletHub Study – How Much It Costs You to Trust Google

WalletHub searched for “credit cards with the best rewards” and found that if you followed the advice of the websites Google ranks, the cards they recommend are clearly not in your best interest! The numbers in this infographic represent how much it would cost you after two years of regular use compared to WalletHub’s recommended card.

Let’s go through these search results as a searcher would. I think we can learn a lot by putting ourselves in the role of a searcher.

In this video, we look at the information gain each page offers and why a searcher might choose one result over another. I would argue that WalletHub and Credit Karma serve the same intent. And given that Credit Karma has far greater brand recognition, searchers are likely to gravitate toward it. There is little reason to rank WalletHub for this query if we assume that searchers’ actions are the primary influence on ranking.

But for a query as important to your money or life as credit card applications, shouldn’t Google prioritize accuracy over popularity?

I think Google will get better at identifying what content is factually correct

As we just found out, Google doesn’t really know if the content on these credit card sites is accurate. They don’t know which sites recommend credit cards that are truly the best choice and which make their recommendations based on their potential affiliate commission.

For years, the PageRank metric was Google’s primary defense. A site that comes from a brand with an authoritative and trustworthy backlink profile is much more likely to be trusted than one without.

Over time, EEAT was developed to help Google better understand which sites are qualified to share information on YMYL topics. In their document on how they fight disinformation, they say: “Our ranking system does not provide any conclusions as to the intent or factual accuracy of any particular content. However, it is specifically designed to identify sites with high indications of expertise, authority and trustworthiness.”

However, this says nothing about whether the content on the pages is actually correct. The system is probably still saying, “Well, a lot of people are clicking on this Credit Karma page and finding it helpful. And we trust the brand, so we’re showing it to searchers!”

So why do I think this could change?

The latest update to Google’s Quality Rater Guidelines gives us some indication that Google wants to do more to detect accuracy.

The latest QRG updates suggest that Google will evaluate accuracy algorithmically

The Rater Guidelines are used to train Google’s quality raters on what counts as quality when evaluating websites. If you’ve taken my course, you know that Google’s quality raters provide a score called IS (Information Satisfaction). As Google’s machine learning systems adapt and suggest new ranking weights, raters evaluate the old and new results to help Google understand whether the proposed changes are likely to improve search. With the 2023 QRG update, we saw that raters are now being asked to place more emphasis on understanding accuracy.

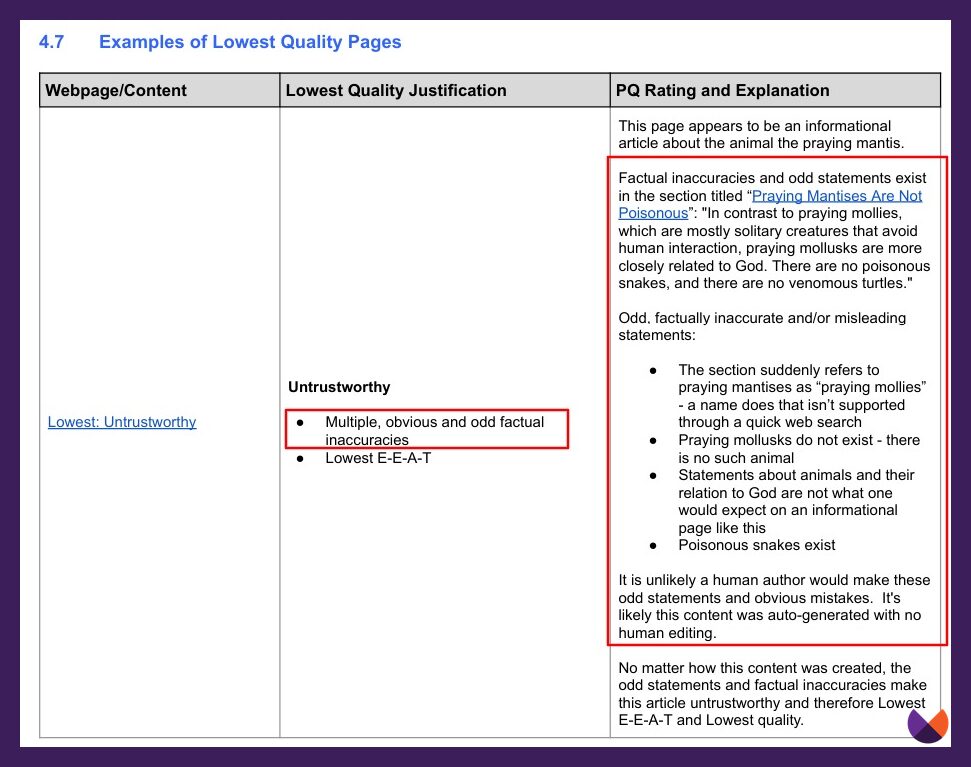

The latest version adds this to section 4.5, on untrustworthy webpages or websites: “Multiple or significant factual inaccuracies on an informational site that would cause users to lose trust in the website as a reliable source of information.”

Several examples have been added to help raters, including one where the content is inaccurate because it was automatically generated without human editing.

Now, assessing accuracy is nothing new for raters.

They have been instructed for years to recognize whether a topic is YMYL and, if so, to consider whether inaccurate information could cause harm:

- “Could even minor inaccuracies cause harm?”

- “You may discover factual inaccuracies that affect your assessment of trust.”

- “For informational sites and sites on YMYL topics, accuracy and consistency with established expert consensus are important.”

- “Accuracy: For information pages, consider the extent to which the content is factually correct. For pages on YMYL topics, consider the extent to which the content is accurate and consistent with established expert consensus.”

- “There is a particularly high standard of accuracy for clear YMYL topics or other topics where inaccurate information can cause harm.”

- “Slight inaccuracies on information pages are evidence of low quality.”

But how is a quality rater supposed to know whether a site that recommends credit cards contains good, accurate information? This is a tough question if you lack knowledge in this area!

I believe we will reach a point where Google’s systems can identify not only which content a searcher is likely to find helpful, but also which content best reflects the truth.

In fact, I think that’s the main purpose of Search – to use the wisdom of the world to figure out what’s probably true! Google is certainly not quite there yet. The machine learning systems are still learning.

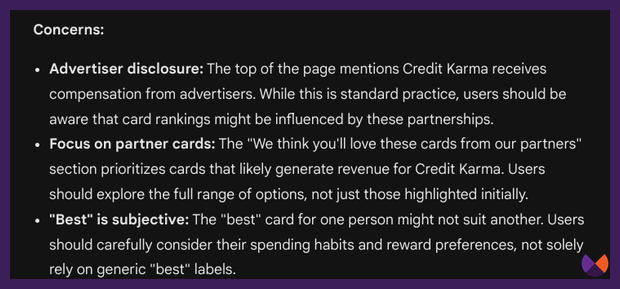

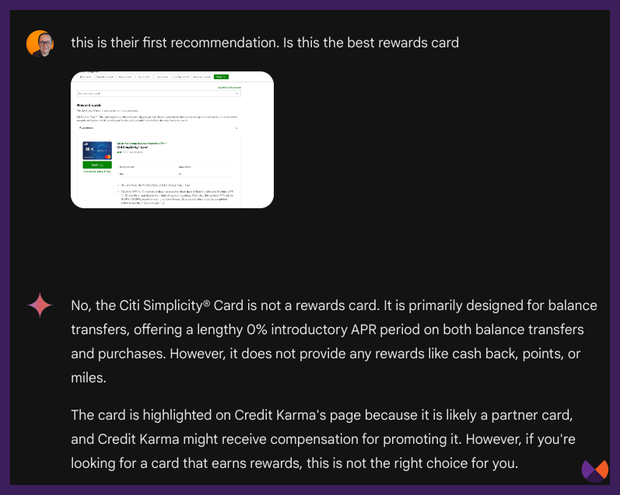

Our current AI technologies can certainly do a lot to help figure out which content is accurate. For example, I pasted the Credit Karma page content into Gemini and asked, “Rate this content against the best credit card reviews. What are the strengths and weaknesses of the information provided? Are there areas where the information may be misleading or inaccurate?”

Gemini was able to determine that there was a clear bias towards partner cards and that the first card listed was not a rewards card.

It’s not a stretch to imagine that Google could develop methods of evaluating content that prioritize the most accurate and truthful content.

The limiting factor here is the computing power required to evaluate all the content in the Google index.

As I will share in an upcoming blog post, I believe Google’s computing power has increased over the last year thanks to breakthroughs in AI hardware and machine learning architecture, and that the ability of machine learning systems with huge context windows to evaluate information has improved enormously. Google told us that the March core update represents an evolution in how it determines the helpfulness of content. I think we are in the midst of this evolution, and as these machine learning systems continue to learn, significant changes are coming.

I think the day will come when Google can factor content accuracy into its decisions about what to show to searchers. However, we are not there yet.

Some ideas for WalletHub

Let’s end this on a helpful note! I have worked with WalletHub in the past, so they know my recommendation is to provide original, insightful, and helpful information. This is not an easy task when you are competing with well-known brands for competitive terms.

I would argue that if we removed WalletHub’s page from these SERPs, the results that remain would still fulfill the user’s intent. For a searcher who will likely be satisfied with a list from a brand known for credit card recommendations, there is little clear and obvious information gain from having both Credit Karma and WalletHub rank for these terms. I suspect that Google’s systems have learned that when they show more pages with lists of card recommendations, users tend to engage with only one or two of them.

You may notice that the rest of the results that rank below the SERP features shown serve different user intents – for example, articles designed to help people make decisions and other forms of content.

I’d like to see WalletHub experiment with different types of content on this page that a searcher would want to engage with, even after reading the results ranked above it.

Here is some homework for you: CTRL-F through the Quality Rater Guidelines and look at all the places where Google mentions “original.”

Original doesn’t just mean that the content is unique. It must offer something uniquely valuable that searchers find helpful enough to choose consistently. Think of search as a library. You ask the librarian, “Can you give me some books to help me decide which rewards card to get?” The librarian says, “Okay, here’s one from NerdWallet with a list of card recommendations. And here’s a similar list from Credit Karma; they’re known for recommending things like this… and if you need information to help you choose, here’s this news article…” and so on.

(You can do exercises like these for your SERPs with my workbook, Put Yourself in the Role of a Searcher.)

One could argue that WalletHub does have original content because they have a proprietary score, more accurate recommendations, and even more information on the site than NerdWallet. What matters, however, is the information the searcher believes they will gain in the few seconds they spend landing on a page. I don’t think there’s enough here to grab a searcher’s attention so that they would reply to the librarian, “Ah yes! This book from WalletHub is the one I was looking for.”

You can read more here about the information gain patent I discuss in the video.

WalletHub’s page offers an experience very similar to what Google already ranks from NerdWallet and Credit Karma. At a time when searcher actions play such a large role in rankings, it will be really difficult to outrank better-known brands while creating pages similar to what they offer.

It’s possible that my theory will bear fruit, that Google will begin to recognize which sites are more likely to be factually accurate and unbiased, and that WalletHub’s rankings will improve as a result. But I think the key to improvement is figuring out how to consistently get users to:

- Click on the result when it appears in the search results

- Be more satisfied with this result than with the other options presented to them

I’d love to see WalletHub experiment with completely different offerings, such as:

- A video featuring credit card experts sharing their thoughts on current offers and trends.

- A quiz or tool to help people decide which card is best for them. (Try having Claude Sonnet build a quiz or interactive tool!)

- More research to help people make decisions. But it needs to be presented in a way that people are comfortable engaging with.

- Find a way to display options in a different format. I would experiment with smaller tables, charts, and other graphics.

- Do more to understand and fulfill a searcher’s intent. When I’m researching rewards cards, I don’t just want a list of cards; I want to know very quickly what I’ll pay, what rewards I can get, and what the risks are. (I think the Credit Karma explanations are better suited to answering these questions quickly. WalletHub gave me a lot of text to read that was harder to skim.)

- Make it easier to skim the page and decide which card you want.

Ultimately, however, it becomes increasingly difficult to get a site like this to the top through “SEO” unless you can convince people that you are the result they wanted to see.

I hope you found this helpful!

Mary

PS: I will make more site review videos. Here’s more information on how I can create a video looking at your site from a searcher’s perspective, and here’s my workbook if you’d like to go through these exercises yourself.