While helping companies that were heavily impacted by the insane run of algorithm updates in the fall of 2023, I once again heard from several site owners who were at their highest traffic levels ever right before they got hit. I’ll share more about this soon, but it’s something I’ve seen for a long time. And now that we’ve read Google’s testimony about Navboost and user engagement signals from the recent antitrust case, several ideas come to mind.

When helping businesses affected by major algorithm updates (e.g. major core updates), it is extremely important to create a so-called “delta report” to identify the top search queries and landing pages that dropped during those updates. Delta reports can be powerful and help site owners determine whether a decline is due to relevancy adjustments, intent shifts, or overall site quality issues. If quality was the issue, then examining the top search queries and landing pages that dropped can often yield important insights. Those insights can help identify low-quality content, thin content, low-quality AI content, user experience barriers, aggressive advertising, a misleading or aggressive affiliate setup, and more.
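To make the idea concrete, here is a minimal sketch of a delta report in Python. It assumes you already have clicks per query and landing page combination for two equal-length date ranges (before and after the update), for example from a search performance export. The function name, field names, and sample data are all hypothetical and only meant to illustrate the comparison.

```python
from collections import namedtuple

# One row of the report: a query + landing page combination that lost clicks.
DeltaRow = namedtuple(
    "DeltaRow", "query page clicks_before clicks_after delta pct_change"
)


def delta_report(before, after, top_n=10):
    """Compare clicks per (query, page) before and after an update.

    `before` and `after` map (query, page) -> clicks for two date ranges
    of equal length. Returns the combinations that lost the most clicks,
    biggest drop first.
    """
    rows = []
    for (query, page), clicks_before in before.items():
        clicks_after = after.get((query, page), 0)  # absent afterwards = 0 clicks
        delta = clicks_after - clicks_before
        if delta >= 0:
            continue  # keep only combinations that dropped
        pct_change = round(100.0 * delta / clicks_before, 1)
        rows.append(
            DeltaRow(query, page, clicks_before, clicks_after, delta, pct_change)
        )
    rows.sort(key=lambda row: row.delta)  # most negative delta first
    return rows[:top_n]


# Hypothetical sample data, for illustration only.
before = {
    ("best running shoes", "/reviews/running-shoes"): 1200,
    ("how to clean sneakers", "/guides/clean-sneakers"): 400,
    ("trail shoe sizing", "/guides/sizing"): 150,
}
after = {
    ("best running shoes", "/reviews/running-shoes"): 300,
    ("how to clean sneakers", "/guides/clean-sneakers"): 420,
    ("trail shoe sizing", "/guides/sizing"): 90,
}

report = delta_report(before, after)
for row in report:
    print(row)
```

The output is only a starting point. Each query and landing page that dropped still needs a manual review to decide whether the cause was relevancy, intent, or quality.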

This also got me thinking again about “quality indexing,” which I have covered many times in my posts and presentations on major algorithm updates. Quality indexing means making sure your highest-quality content is indexed while low-quality or thin content is not. That ratio matters, because we know that Google takes every indexed page into account when evaluating quality. Google’s John Mueller has explained this several times, and I’ve shared those video clips over the years.

But are all things equal from a quality perspective? In other words, if you find a lot of low-quality content on your site that rarely shows up in the search results, is that the same as lower-quality (or unhelpful) content that is highly visible in the search results?

John Mueller’s comments on removing low-quality content have changed slightly over the years in this regard. I noticed this over time and it always piqued my curiosity. In addition to explaining that all indexed content can be evaluated for quality, John explained that he would focus first on content that is highly visible and low quality. I’ll explain in more detail why this makes perfect sense (especially in light of new information from the latest antitrust case against Google).

Below, I’ll briefly cover some background on user engagement signals and SEO, then address some interesting comments from Google’s John Mueller over the past few years about removing low-quality content, and then link those to Google’s recent statement about using user engagement signals to help with rankings (e.g. Navboost).

First, let’s take a look at history and discuss user engagement signals and their impact on SEO.

User engagement signals and SEO, as well as Google’s uncanny ability to understand user satisfaction:

In the recent antitrust case against Google, we learned a lot about how Google uses user interaction signals to influence rankings. For example, Pandu Nayak from Google talked about Navboost, how Google collects click data for 13 months (previously 18 months) and how this data can help Google understand user satisfaction (satisfied search users).

Even though the discovery of Navboost stunned a lot of people (rightfully), there were always signs that Google was doing something with user engagement data from a rankings perspective. No one really knew for sure, but it was hard to ignore the concept while dealing with many sites going down over time due to major algorithm updates (especially major core updates).

For example, I wrote a post back in 2013 about the misleading, ominous surge in traffic that can come right before a big algorithm update strikes. Note that Search Engine Watch has issues with older posts, so I’ve linked to the AMP version of my post there (which seems to load). You can also view a PDF version of the post if the AMP version doesn’t work for some reason. I wrote that post after many companies reached out to me about big Panda hits, explaining that they had just experienced their highest traffic ever right before they crashed with the next update.

To me, it seemed like Google was gaining so much information based on the way users interacted with the content from the SERPs that it ultimately contributed to the decline. In other words, Google could see large numbers of dissatisfied users visiting these sites. And then, boom, the sites got hit.

Here is a quote from my SEW article:

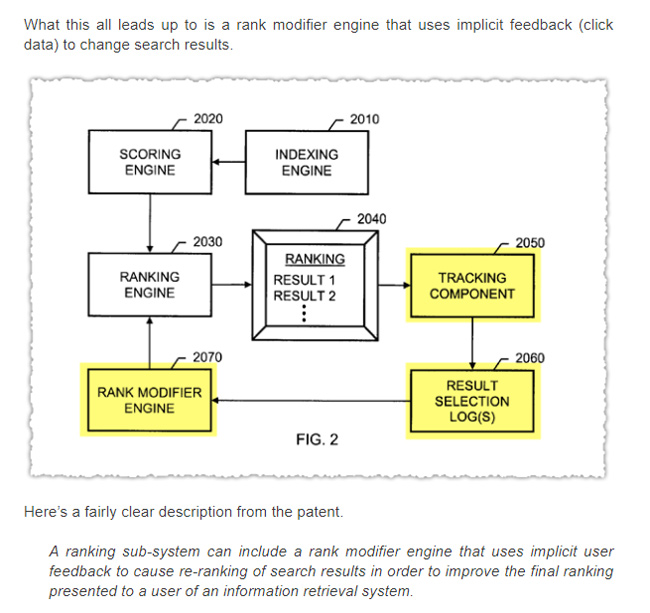

AJ Kohn also wrote a post in 2015 about CTR as a ranking factor. AJ has also worked on many websites over the years and strongly suspected that user engagement signals played a role in rankings. Here is a screenshot from AJ’s post related to implicit user feedback.

I’ve also written extensively about the danger of low dwell time and how implementing an adjusted bounce rate could help site owners get a better sense of content that doesn’t meet or exceed user expectations. This post is from 2012. And I expanded on this idea with another post in 2018 about using multiple tracking mechanisms as an indicator of user satisfaction (including adjusted bounce rate, scroll depth tracking, and dwell time on the page). Note that this was for GA3 and not GA4.

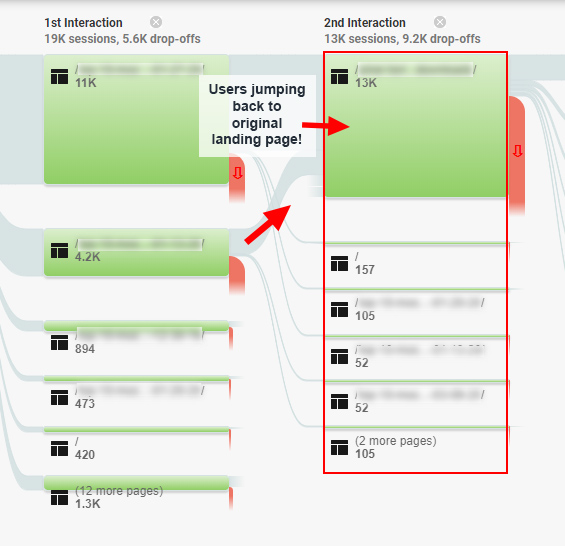
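As a rough illustration of the adjusted bounce rate idea mentioned above, the Python sketch below counts a single-page session as a bounce only if the visitor left before a time threshold. The 30-second default, the function name, and the sample sessions are assumptions for illustration. This is not how any analytics product calculates the metric internally.

```python
def adjusted_bounce_rate(sessions, min_seconds=30):
    """Share of sessions that viewed one page and left before `min_seconds`.

    Each session is a (pages_viewed, seconds_on_site) tuple. The 30-second
    default is an illustrative assumption, not a recommended threshold.
    """
    if not sessions:
        return 0.0
    bounces = sum(
        1
        for pages, seconds in sessions
        if pages == 1 and seconds < min_seconds
    )
    return bounces / len(sessions)


# Hypothetical sessions: (pages viewed, seconds on site).
sessions = [(1, 5), (1, 95), (3, 240), (1, 12)]

# A standard bounce rate treats every single-page session as a bounce.
standard = sum(1 for pages, _ in sessions if pages == 1) / len(sessions)
adjusted = adjusted_bounce_rate(sessions)

print(f"standard bounce rate: {standard:.0%}, adjusted: {adjusted:.0%}")
```

In this sample, the visitor who spent 95 seconds on a single page is no longer counted as a bounce, which is the distinction the adjusted metric is meant to capture.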

And as a great example of how user engagement issues can impact SEO, there’s a case study I wrote in 2020 about the “SEO engagement trap,” where I found users running around a site in circles and, I’m sure, eventually heading back to the SERPs for better answers. That site was hit hard by a major core update and later recovered after many of these issues were fixed. Yes, that meant more negative user interaction signals for Google to evaluate.

Here’s a screenshot from this post showing behavior flow reports for frustrated users:

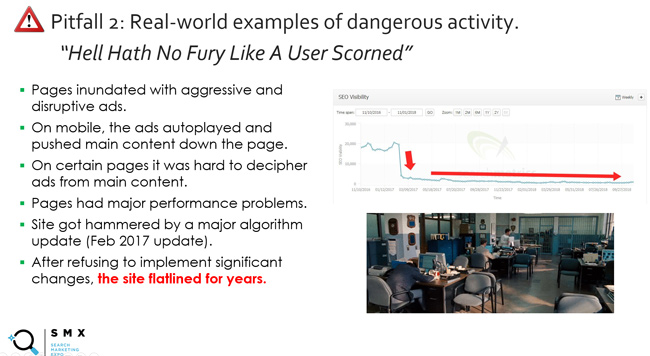

And there’s the slide (and motto) I’ve long used in my presentations about major core updates: “Hell hath no fury like a user scorned.” This goes beyond content quality and includes aggressive advertising, user experience barriers, and more. Basically, you don’t want to annoy users. You will pay a heavy price. And by the way, how can Google better understand angry users? Well, by tracking user engagement signals like low dwell time (someone returning to the SERPs quickly after visiting a site from Google).

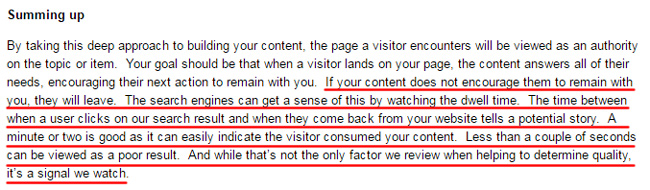

And on the topic of dwell time, one of my favorite quotes comes from years ago when Bing’s Duane Forrester wrote a blog post explaining how a short dwell time could impact search visibility. Duane no longer works for Bing, but was Bing’s Matt Cutts for several years. In this post, which has since been deleted (but can be found via the Wayback Machine), Duane explained the following:

“If your content doesn’t encourage them to stay with you, they will leave. The search engines can get an idea of this by observing the time spent on your site. The time between a user clicking on our search result and returning from your site tells a potential story. A minute or two is good as it can easily indicate that the visitor has consumed your content. Less than a few seconds can be considered a bad result. And while this is not the only factor we consider when determining quality, it is a signal we keep an eye on.”

Then, in another post on SEJ, Duane continued:

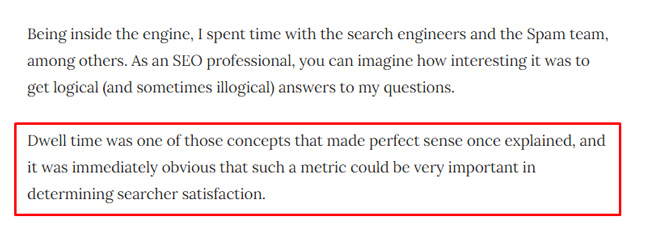

“Dwell time was one of those concepts that, once explained, made perfect sense, and it was immediately clear that such a metric could be very important in determining searcher satisfaction.”

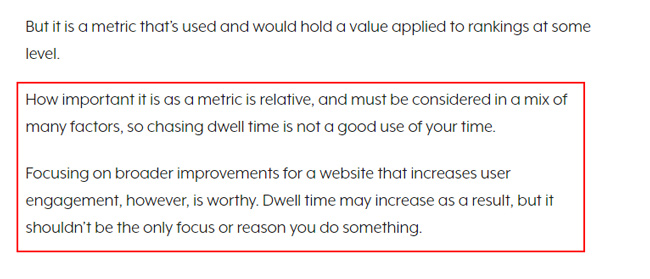

And finally, Duane explained that dwell time was considered as part of “a mix of factors,” which is important to understand. He explained that chasing dwell time was a bad idea, and that you should instead focus on broader improvements to a website that increase user engagement (time spent may increase as a result of that work). This is also why I believe the “kitchen sink” approach to remediation is an effective way to go. Address as many quality issues as possible and great things can happen down the line.

Note that you shouldn’t focus on the specific amount of time Duane gave in 2011 for a “short” visit. I’m sure this is one of the reasons the post was removed. For some types of content, a shorter time on the page is perfectly fine from a user engagement perspective. However, it is extremely important to understand the key point about low dwell time, especially when it shows up across a lot of content on a website. That was true then, and it certainly still is today.

The main point I’m trying to get across is that user interaction always seemed to be a factor somehow…we just didn’t know exactly how. Until Navboost was revealed. I’ll share more about it soon.

Why “Highly Visible” Matters: Comments from Google’s John Mueller.

OK, now let’s take a closer look at what I call “quality indexing.” Again, that means making sure only your highest-quality content, the content that can meet or exceed user expectations, is indexed, while low-quality or thin content is not.

Google has stated over time that all indexed pages are taken into account when evaluating quality. I’ve covered this often in my blog posts and presentations on major algorithm updates (especially when it comes to major core updates).

Here is John in 2017 explaining this:

But over time, I noticed a change in John’s response when asked about removing lower-quality content. John began to explain that he would focus on content that was “highly visible” and of lower quality. I heard this in several Search Central hangout videos, and he also talked about it in an interview with Marie Haynes.

Here are some examples:

First, John explains here that not all content is equal. Google will try to figure out the most important pages and focus on them:

Here John explains that Google can understand the core content of a website, where visitors go, etc.

And here John explains that if you know people are visiting certain pages, it would make sense to target these lower quality pages first (so people can stick around):

So why does this matter? Now that we know more about how Google uses user engagement signals to influence visibility and rankings via Navboost, these statements from John make a lot of sense. The more user interaction data Google has for query and landing page combinations, the better Google can understand user satisfaction. If a page is low quality but not visible, Google has little or no user engagement data for it. Of course, Google can use other signals, natural language processing, etc. to better understand content, but user interaction signals are amazing for understanding searcher satisfaction.

Note, before I continue: I want to make it clear that I would still tackle all content quality issues on a website (even those that are not “highly visible”), but I would start with the most visible ones. This is an important point for site owners dealing with a sharp decline from major core updates, helpful content updates, or review updates.

Google’s antitrust trial and user interaction signals influencing rankings.

I’ve mentioned Navboost several times in this post and wanted to address it in this section. I won’t go into much depth as it’s been covered in a number of other industry posts recently, but it’s important to understand.

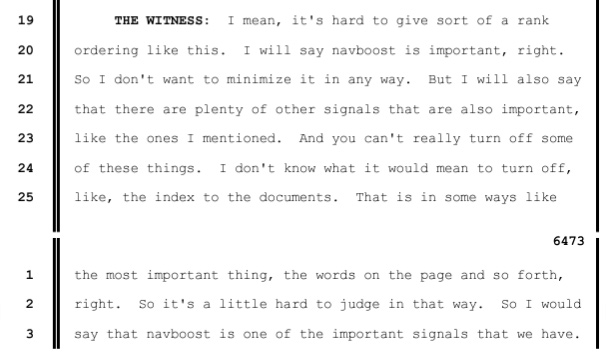

In testimony during Google’s antitrust trial, Pandu Nayak explained the use of Navboost, a system for leveraging user interaction data, to help Google understand user satisfaction. Essentially, it allows Google to learn from its users over time and adjust rankings based on those insights. Navboost is of course not the only factor at play, but it is an important factor in determining rankings.

Here Pandu Nayak talks about the importance of Navboost:

“I mean, it’s difficult to put a ranking like that. I would say Navboost is important, right. So I don’t want to downplay it in any way. But I also want to say that there are many other important signals like the ones I mentioned. And some of these things you can’t really turn off. I don’t know what it would mean to disable the index to the documents, for example. That’s the most important thing in a way, the words on the page and so on, right. So it’s a little difficult to judge it like that. So I would say Navboost is one of the important signals we have.”

So when pages are highly visible for a search query, Google is actively collecting user engagement signals for those query and URL combinations (it currently retains 13 months of click data). And Navboost can use these signals (including dwell time, long clicks, scrolling, hovering, and more) to help Google’s systems understand user satisfaction.

On the other hand, if pages aren’t highly visible for search queries, Google has no user engagement signals to use, or not enough signals to be effective. That doesn’t mean Google can’t understand page quality, but it will lack real user signals for those query and landing page combinations. And that is incredibly important.

Note: If you want to learn more about user engagement signals from the antitrust case against Google, AJ Kohn wrote a great post covering this information based on Pandu Nayak’s testimony. I recommend reading AJ’s post if you haven’t already, as it covers more about Navboost, user engagement signals, CTR as a ranking factor, etc.

Here is a slide from a Google presentation that was used as evidence during the trial. It highlights the importance of user interaction signals in determining search satisfaction. Keep in mind that this slide is several years old. It’s about the “source of Google magic”:

And don’t forget about site-level quality algorithms:

Again, I don’t want to overload this post with too much information from the antitrust case, but my key point is that “highly visible” content in the SERPs can gain a lot of user interaction signals that Google can use as a factor for rankings. It is also possible that Google uses this data in aggregate to influence site-level quality algorithms. And these site-level algorithms can play a big role in how sites perform during major core updates (and other important algorithm updates). I’ve covered this for a long time too.

What this means for you. My recommendation for website owners affected by major algorithm updates:

For website owners heavily impacted by major algorithm updates, it’s important to take a step back and analyze the website overall from a quality perspective. And as Google’s John Mueller has previously explained, “quality” is more than just content. It also includes UX issues, the advertising situation, how content is presented, and more.

And now that it has been confirmed that Google is using user engagement signals to influence visibility and rankings via Navboost, I would put “highly visible” URLs at the top of the list when deciding what to tackle. Yes, you should address any quality issues that come up, even those that may not be as visible, but I would prioritize the URLs that were most visible leading up to a major algorithm update. Yes, creating a delta report is even more important now.
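The prioritization described above can be sketched in a few lines of Python. This assumes you have a list of pages with their pre-update impressions and a manual quality flag from your own review. The field names, the 1,000-impression cut-off for “highly visible,” and the sample URLs are all hypothetical. Note that the sketch keeps every flagged page in the worklist and only changes the order.

```python
def prioritize_urls(pages, min_impressions=1000):
    """Order flagged pages so the most visible ones are reviewed first.

    `pages` is a list of dicts with 'url', 'impressions' (leading up to the
    update), and 'low_quality' (a manual review flag). `min_impressions` is
    an arbitrary cut-off for labeling a page "highly visible".
    """
    flagged = [page for page in pages if page["low_quality"]]
    flagged.sort(key=lambda page: page["impressions"], reverse=True)
    return [
        (
            page["url"],
            "highly visible"
            if page["impressions"] >= min_impressions
            else "lower visibility",
        )
        for page in flagged
    ]


# Hypothetical sample data, for illustration only.
pages = [
    {"url": "/guides/sizing", "impressions": 40, "low_quality": True},
    {"url": "/reviews/running-shoes", "impressions": 52000, "low_quality": True},
    {"url": "/about", "impressions": 900, "low_quality": False},
    {"url": "/tag/shoes", "impressions": 3100, "low_quality": True},
]

worklist = prioritize_urls(pages)
for url, label in worklist:
    print(f"{label:>16}  {url}")
```

Nothing is dropped from the list, in line with the recommendation to address all quality issues while starting with the URLs users were actually seeing in the SERPs.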

Summary: Cover all low-quality content, but start with what is “highly visible.”

Over the years, I have always explained that content must meet or exceed user expectations depending on the query. With the latest testimony from the antitrust case against Google, we now have clear evidence that Google is indeed using user interaction signals to adjust rankings (and potentially influence site-level quality algorithms). As a website owner, don’t allow low-quality, thin, or unhelpful content to remain in the SERPs for users to interact with. As I’ve seen with major algorithm updates over the years, this won’t end well. By all means address any potential quality issues on a website, but don’t overlook what’s clearly visible. This is a good starting point when it comes to improving the overall quality of the website.

GG